(This is a follow-up on my portion of the More Secrets of JavaScript Libraries panel at SXSW.)

It’s become increasingly obvious to me that cross-browser JavaScript development and testing, as we know it, does not scale.

jQuery’s Test Suites

Take the case of the jQuery core testing environment. Our default test suite is an XHTML page (served with the HTML mimetype) with the correct doctype. It includes a number of tests that cover all aspects of the library (core functionality, DOM traversal, selectors, Ajax, etc.). We have a separate suite that tests offset positioning (integrating this into the main suite would be difficult, at best, since positioning is highly dependent upon the surrounding content). This means that we have, at minimum, two test suites straight out of the gate.

Next, we have a test suite that serves the regular XHTML test suite with the correct mimetype (application/xhtml+xml). We aren’t 100% passing this one yet, but we’d like to be sometime before jQuery 1.4 is ready. Additionally, we’re working on another version that serves the regular test suite with its doctype stripped (throwing it into quirks mode). This is another one that we’d like to be passing completely in time for 1.4.

Both of those tweaks (one with correct mimetype and one with no doctype) would also need to be done for the offset test suite. We’re now up to 6 test suites.

We have another version of the default jQuery test suite that runs with a copy of Prototype and Scriptaculous injected (to make sure that the external library doesn’t affect internal jQuery code). Another does the same with MooTools, and another does the same with old versions of jQuery. That’s three more test suites (up to 9).

Finally, we’re working on another version of the suite that manipulates Object.prototype before running the tests. This will help us eventually work in that hostile environment. This is another one that we’d like to have done in time for jQuery 1.4, and it brings our test suite total up to 10.

We’re in the initial planning stages of developing a pure-XUL test environment (to make sure jQuery works well in Firefox extensions). Eventually we’d like to look at other environments as well (such as in Rhino + Env.js, Rhino + HTMLUnit, and Adobe AIR). I won’t count these non-browser/HTML environments, for now.

At minimum that’s 10 separate test suites that we need to run for jQuery. Ideally, we should be running every one of them just prior to committing a change, just after committing a change, for every patch that’s waiting to be committed, and before a release goes out…

in every browser that we support.
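As a rough sketch of the tally above, the ten suites can be modeled as two base suites crossed with three page variants, plus four variants of the default suite run in hostile environments. The labels are hypothetical names for the configurations described in this post, not identifiers from the jQuery repository, and the sketch assumes the Object.prototype variant applies to the default suite.

```python
from itertools import product

# Hypothetical labels for the configurations described above.
BASE_SUITES = ["default", "offset"]
PAGE_VARIANTS = [
    "XHTML served as text/html",
    "XHTML served as application/xhtml+xml",
    "no doctype (quirks mode)",
]
# Hostile environments that are only applied to the default suite.
HOSTILE_ENVIRONMENTS = [
    "Prototype + Scriptaculous injected",
    "MooTools injected",
    "old jQuery injected",
    "Object.prototype modified",
]


def enumerate_suites():
    """Return one label per separate test suite that has to be run."""
    suites = [f"{base} / {variant}" for base, variant in product(BASE_SUITES, PAGE_VARIANTS)]
    suites += [f"default / {env}" for env in HOSTILE_ENVIRONMENTS]
    return suites


for label in enumerate_suites():
    print(label)
print(len(enumerate_suites()))  # 10
```

Every new page variant multiplies the base suites, while every new hostile environment only adds one more suite.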

The Browser Problem

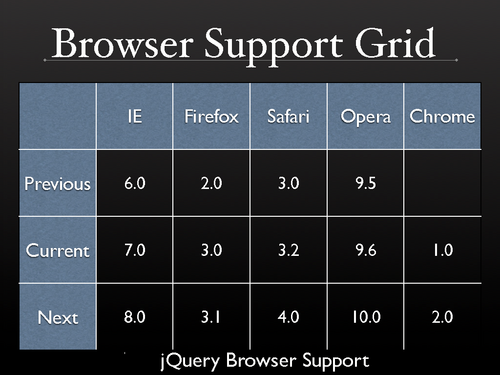

And this is where cross-browser JavaScript unit testing goes to crazy town. In the jQuery project we try to support the current version of all major browsers, the last released version, and the upcoming nightlies/betas (we balance this a little bit with how rapidly users upgrade browsers – Safari and Opera users upgrade very quickly).

At the time of this post that includes 12 browsers.

- Internet Explorer 6, 7, 8. (Not including 8 in 7 mode.)

- Firefox 2, 3, Nightly.

- Safari 3.2, 4.

- Opera 9.6, 10.

- Chrome 1, 2.

Of course, that’s just on Windows and doesn’t include OS X or Linux. For the sake of sanity in the jQuery project we generally only test on one platform – but ideally we should be testing Firefox, Safari, and Opera (the only multi-platform browsers) on all platforms.

The end result is that we need to run 10 separate test suites in 12 separate browsers before and after every single commit to jQuery core. Cross-Browser JavaScript testing does not scale.
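The arithmetic behind that claim is a plain cross product. A small sketch, assuming the 10-suite tally and the 12 Windows browsers listed above (the suite names are placeholders):

```python
from itertools import product

SUITE_COUNT = 10  # the ten suites tallied earlier in the post

# The Windows browsers listed above, keyed by family.
BROWSERS = {
    "Internet Explorer": ["6", "7", "8"],
    "Firefox": ["2", "3", "Nightly"],
    "Safari": ["3.2", "4"],
    "Opera": ["9.6", "10"],
    "Chrome": ["1", "2"],
}

browsers = [f"{family} {version}" for family, versions in BROWSERS.items() for version in versions]
suites = [f"suite {n}" for n in range(1, SUITE_COUNT + 1)]

# Every (suite, browser) pair is one separate test run.
runs_per_pass = list(product(suites, browsers))

print(len(browsers))           # 12
print(len(runs_per_pass))      # 120
print(2 * len(runs_per_pass))  # 240: one pass before and one after each commit
```

Each additional browser (another platform, or a mobile device) adds another 10 runs to every pass.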

Of course, this is just desktop cross-browser JavaScript testing – we should be testing on some of the popular mobile devices, as well. (MobileSafari, Opera Mobile, and possibly NetFront and BlackBerry.)

Manual Testing

All of the above test suites are purely automated. You open them up in a browser, wait for them to finish, and look at the results – they require no human intervention whatsoever (save for the initial loading of the URL). This works for a lot of JavaScript tests (and for all the tests in jQuery core) but it’s unable to cover interactive testing.

Some test suites (such as Yahoo UI, jQuery UI, and Selenium) have ways of automating pieces of user interaction (you can write tests like ‘Click this button, then click this other thing’). For most cases this works pretty well. However, all of this is just an approximation of the actual interaction that a user may employ. Nothing beats having real people manually run through some easily reproducible (and verifiable) tests by hand.

This is the biggest scaling problem of all. Take the previous problem of scaling automated test suites and multiply it by the number of tests that you want to run. Having a human run 100 tests in 12 browsers (1,200 manual runs) on every commit is just insane. There has to be a better way, since it’s obvious that Cross-Browser JavaScript testing does not scale.

What currently exists?

The only way to tackle the above problem of scale is to have a massive number of machines dedicated to testing and to somehow automate the process of sending those machines test suites and retrieving their results.

There currently exists an Open Source tool related to this problem space: Selenium Grid. It’s able to send out tests to a number of machines and automatically retrieve the results – but there are a couple of problems:

- As far as I can tell, Selenium Grid requires that you use Selenium to run your tests. Currently no major JavaScript library uses Selenium (and it would be a major shift in order to do so).

- It isn’t able to test against non-desktop machines. Each server must be running a daemon to handle the batches of jobs – this leaves mobile devices out of the picture.

- It can’t test against unknown browsers. Each browser needs special code so that Selenium can launch it, thus an unknown browser (such as IE 8, Opera 10, Firefox Nightly, or Chrome) may not be able to run.

- And most importantly: Selenium Grid requires that you actually own and control a number of machines on which you can run your tests. It’s not always feasible, especially in the world of distributed Open Source JavaScript development, to have the funds for dedicated machines running non-stop. A more cost-effective solution is required.

Naturally, this solution doesn’t tackle the problem of manual testing, either.

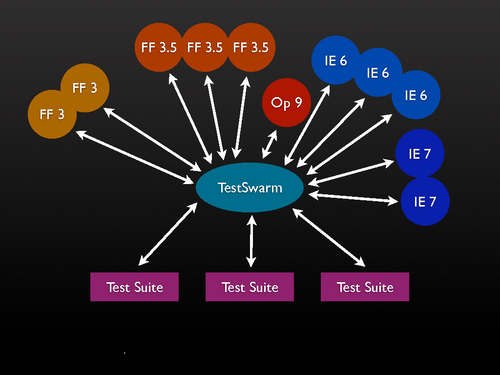

A solution: TestSwarm

All of this leads up to a new project that I’m working on: TestSwarm. It’s still a work in progress but I hope to open up an alpha test by the end of this month – feel free to sign up on the site if you’re interested in participating.

Its construction is very simple. It’s a dumb JavaScript client that continually pings a central server looking for more tests to run. The server collects test suites and sends them out to the respective clients.

Test suites are collected into batches. For example, a single “commit” can have 10 test suites associated with it (and be distributed to a selection of browsers).

The nice thing about this construction is that it’s able to work in a fault-tolerant manner. Clients can come and go. At any given time there might be no Firefox 2s connected, at another time there could be thirty. The jobs are queued and divvied out as the load requires it. Additionally, the client is simple enough to be able to run on mobile devices (while being completely test framework agnostic).
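The server side of that design can be sketched as a per-browser job queue. This is a minimal, in-memory Python sketch under stated assumptions, not TestSwarm’s actual server: the class and method names are hypothetical, a job is a commit label plus the URL of a suite hosted elsewhere, and a job handed to a polling client is only leased, so if that client disappears the job goes back into its queue after a timeout.

```python
import itertools
from collections import deque
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Job:
    commit: str     # hypothetical commit/revision label
    suite_url: str  # where the test suite is hosted
    browser: str    # which browser should run it
    result: Optional[str] = None


class SwarmQueue:
    """Hypothetical in-memory job queue for a swarm of polling browsers."""

    def __init__(self, lease_seconds: float = 300.0):
        self.lease_seconds = lease_seconds
        self.pending: Dict[str, deque] = {}             # browser -> queued jobs
        self.leased: Dict[int, Tuple[Job, float]] = {}  # lease id -> (job, start time)
        self.finished: List[Job] = []
        self._lease_ids = itertools.count(1)

    def submit(self, commit: str, suite_urls: List[str], browsers: List[str]) -> None:
        """Queue every suite of a commit for every requested browser."""
        for browser in browsers:
            queue = self.pending.setdefault(browser, deque())
            for url in suite_urls:
                queue.append(Job(commit, url, browser))

    def poll(self, browser: str, now: float) -> Optional[Tuple[int, Job]]:
        """Called by a client; returns (lease id, job), or None if there is no work."""
        self._expire_leases(now)
        queue = self.pending.get(browser)
        if not queue:
            return None
        job = queue.popleft()
        lease_id = next(self._lease_ids)
        self.leased[lease_id] = (job, now)
        return lease_id, job

    def report(self, lease_id: int, result: str) -> bool:
        """Record a result; returns False if the lease already expired."""
        entry = self.leased.pop(lease_id, None)
        if entry is None:
            return False
        job, _ = entry
        job.result = result
        self.finished.append(job)
        return True

    def _expire_leases(self, now: float) -> None:
        """Put jobs from clients that went away back at the front of their queue."""
        for lease_id, (job, started) in list(self.leased.items()):
            if now - started > self.lease_seconds:
                del self.leased[lease_id]
                self.pending[job.browser].appendleft(job)


# Usage with a hypothetical commit label and example.com URLs.
swarm = SwarmQueue(lease_seconds=60)
swarm.submit("r1234", ["http://example.com/test/index.html",
                       "http://example.com/test/offset.html"], ["Firefox 3", "Safari 4"])
lease_id, job = swarm.poll("Firefox 3", now=0)
print(job.suite_url)                   # http://example.com/test/index.html
print(swarm.report(lease_id, "pass"))  # True
print(swarm.poll("Opera 10", now=0))   # None: nothing queued for Opera 10
```

Because clients only ever ask for work, nothing breaks when no Firefox 2 is connected: its jobs simply wait in their queue until one shows up.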

Here’s how I envision TestSwarm working out: Open Source JavaScript libraries submit their test suite batches to the central server and users join up to help out. Library users can feel like they’re participating and helping the project (which they are!) simply by keeping a couple extra browser windows open during their normal day-to-day activity.

The libraries can also push manual tests out to the users. A user will be notified when new manual tests arrive (maybe via an audible cue?) which they can then quickly run through.

All of this help from the users wouldn’t be for nothing, though: There’d be high score boards keeping track of the users who participate the most and libraries could award the top participants with prizes (t-shirts, mugs, books, etc.).

The framework developers get the benefit of near-instantaneous test feedback from a seemingly-unlimited number of machines and the users get prizes, recognition, and a sense of accomplishment.

If this interests you then please sign up for the alpha.

There’s already been a lot of interest in a “corporate” version of TestSwarm. While I’m not planning on an immediate solution (other than releasing the software completely Open Source) I would like to have some room in place for future expansion (perhaps users could get paid to run through manual tests – sort of a Mechanical Turk for JavaScript testing – I dunno, but there’s a lot of fodder here for growth).

I’m really excited – I think we’re finally getting close to a solution for JavaScript testing’s scalability problem.

Stefan F (March 20, 2009 at 10:59 am)

Just recently I read a post describing a very similar idea. It was about implementing Google’s Map-Reduce in JS: http://www.igvita.com/2009/03/03/collaborative-map-reduce-in-the-browser/ It includes some sample code, so maybe there’s room for collaboration.

ricardoe (March 20, 2009 at 11:05 am)

Great news! We really need some solutions for this; JS unit tests are kind of primitive.

Thanks for the efforts

Ryan Breen (March 20, 2009 at 11:07 am)

I love this idea. Great work!

Dave Mosher (March 20, 2009 at 11:13 am)

Really excellent idea. If it picks up some grassroots support from the JS development community it could really empower some super-scale level of testing that hasn’t been available before :)

Looking forward to helping alpha test.

Dan Fabulich (March 20, 2009 at 11:37 am)

Speaking as a Selenium developer, I would like to politely ask you (strongly encourage you?) not to go off and code your own thing in TestSwarm.

Selenium is also a dumb JavaScript client that pings the server for additional work. Selenium Grid is just the server-side architecture on top of this. “Selenium Core” is written entirely in JavaScript, and automates the browser with Comet.

That’s also why we support so many browsers, including IE8, including Google Chrome.

Why not join us in making Selenium the cross-browser testing platform you want?

Dan Fabulich (March 20, 2009 at 11:40 am)

Here’s an example Selenium Core test, written with our dumbed-down HTML Selenese language. You can crowdsource these test results, posting them back to a central server, using Selenium today.

http://svn.openqa.org/fisheye/browse/~raw,r=2281/selenium/trunk/src/main/resources/core/TestRunner.html?test=..%2Ftests%2FTestSuite.html&resultsUrl=..%2FpostResults

Jeffrey Gilbert (March 20, 2009 at 11:41 am)

wicked. you’re torrenting unit testing. That’s wicked. Technically you could get 3 machines with 2 VMs running all the browsers on current, past, and future versions for each platform and have a full test suite available on commodity hardware. Anything else would just be bonus material.

Phil (Instine) (March 20, 2009 at 11:49 am)

Yup. And then there’s HTML 4 strict. And for me fault tolerance against invalid markup. And what about quirks mode in all IE versions, and and and…

If you get this going, I for one will be very interested in being able to use the network you build. My guess is the swarm will end up being more powerful than you need it to be. So allowing others like myself to use your spare cycles would be VERY much appreciated!

John Resig (March 20, 2009 at 11:50 am)

@Dan: I think you’re misunderstanding what is being built here (actually, has already been built, since it’s done at this point). It’s not the test suite or JavaScript client, it’s the code to collect and organize users to help test common code bases. What I’m writing will actually work with Selenium Core (and the test suites of jQuery, Prototype, Scriptaculous, Dojo, Yahoo UI, MooTools, etc.). A current Selenium user will be able to take their suite and run it against dozens of browsers, distributed amongst many users.

Since this code is completely standalone (has no dependencies upon specific test suites, Selenium included) I don’t see a specific advantage to having it be part of the Selenium project.

This isn’t duplicating anything that Selenium has – and certainly Selenium users will be able to take full advantage of it.

Patrick Lightbody (March 20, 2009 at 12:00 pm)

I’m also one of the Selenium developers and I’d love to see us work together on this. I think there may be some confusion about how Selenium works and what it does. It definitely doesn’t require any JS library to “use” Selenium. It works very well with any system – I have tests running against apps built with YUI, Dojo, jQuery, Prototype, DWR, etc.

It also works reasonably well with most browsers. While there are launchers for specific ones, you can always use the “custom” launcher, which will work with most browser executables, including brand new ones we don’t have a specific browser launcher for.

I love the idea of a community-supported pool of browsers and environments for open source projects to build on top of. I could imagine this “open source farm” being useful for all the JS libraries, but also for any open source/free/non-profit/whatever project that has Selenium tests.

lennym (March 20, 2009 at 12:02 pm)

This sounds like a genuinely excellent idea, and a great way for those of us who’d like to contribute to OS projects, but lack the *-fu to get involved, to give something back to the community.

Volker (March 20, 2009 at 12:06 pm)

A very interesting topic. We just implemented a similar thing to run tests and collect test results from various browsers / OSes. The advantage of TestSwarm would be that you could test a lot more installations. Some people have not yet updated their browsers, have different system settings, etc. You cannot set up enough machines to get these results (which is especially important for libraries to be stable).

I’d appreciate getting updates on TestSwarm.

John Resig (March 20, 2009 at 12:13 pm)

@Patrick: Not a whole lot of confusion here: Selenium (the client, at least) is a test suite. Just like jQuery’s test suite, Dojo’s, YUI’s, etc. You wouldn’t use Selenium to run through jQuery’s test suite – you’d use it to write Selenium tests that run in Selenium that test a jQuery-using site (as you said). TestSwarm is completely compatible with this – it’s able to take the test results back from Selenium (and other client-side test suites) and pool them in a central location.

That’s a good point about the custom launcher. That’ll help solve mystery browsers on the desktop, at least. Unfortunately, mobile browsers still need some love.

As far as the pool is concerned, I absolutely hope to have it be open to any Open Source project – and not just limited to those using Selenium, but any test setup.

Matt Kruse (March 20, 2009 at 12:45 pm)

Farming out test to real users with real browsers is simply the most robust way to do testing. I’ve dreamed about something exactly like this for years, so it would be very cool if it takes off and works.

Some thoughts, which you probably have thought of already:

1) Be sure to grab as much info from the browser as possible, such as screen resolution, etc. While not completely reliable, it would be useful in analyzing results.

2) Be careful – some browsers have different configurations or options set or add-ons, etc. Some code may fail in one person’s FF3.1, but work just fine for someone else. Hopefully this will not cause more confusion, as developers try to figure out why code fails in one or two testers’ browsers, only to find out that it’s a local configuration issue.

3) I would like to see a general script run on this “bot net” that will catalog all the browsers and their capabilities. To as much of an extent as possible. It would be great to have a distributed network of browsers in every form and configuration building up a database of capabilities, quirks, and behavior.

Hmm, I had more thoughts but I can’t remember them ;)

Patrick Lightbody (March 20, 2009 at 12:51 pm)

John – got it. Yes, if you’re going for a basic JS framework in which Core can run on top of, that’s fantastic! I just wanted to make sure no one thought that the JS libs themselves had to build _with_ Selenium for Selenium to work :)

Over time we might see Selenium expanding outside the JS sandbox. For example, we’ve found that a more robust way to type values into text fields is to issue OS-level automation commands rather than trying to simulate a type event from JS.

Do you imagine that over time TestSwarm might be able to support some of those types of APIs? Obviously, since it’s a shared env it might be a bit sensitive, but if we can get some sort of standard around a small set of useful automation commands that sit outside the JS sandbox, that’d be really nice!

Thomas Aylott (March 20, 2009 at 1:03 pm)

This is def teh aswomest idea evar!

Asap I’ll be making the MooTools specs and my new SubtleSlickSpeed work with this.

I’ll also be leaving a machine or 5 running through the night for the swarm.

John Resig (March 20, 2009 at 1:13 pm)

@Matt: 1) Good point about grabbing a lot of info. At the moment I’m only collecting useragent but a bunch of other info would definitely be useful as well – besides window dimensions can you think of any other points?

2) I’m concerned about this point, as well. It’s definitely a double-edged sword. I’d love to be able to test jQuery in some bizarre IE 7 + add on setups (ones that I have trouble reproducing) but I wouldn’t want to do it by default. I wonder if we could sub-divide browsers by the plugins/extensions that they have installed (e.g. by default only a clean Firefox would be run, but someone may want to test Firefox+Firebug, Firefox+Noscript, Firefox+Adblock, etc.).

3) That’d be awesome. Being able to push trusted pieces of code out to gather information would be invaluable.

@Patrick Lightbody: I’d absolutely like to be able to expand – and I don’t see why there couldn’t be a common API for this.

@Thomas: Awesome, I’ll definitely be in touch!

h3 (March 20, 2009 at 1:20 pm)

Excellent idea.

It’s kinda funny because last week I had the idea of mixing the jQuery test suite, which I use for a project, with Google Analytics.

It’s possible to create hits on GA with JavaScript and associate data with the created hits. So in theory it would be possible to create a hit for each failed test and then use GA to see which browsers fail which tests, when, and with which version of the code.

To create sufficient data for analysis I could simply run the tests on the project page in a hidden frame or somehow ask people to load the test page.

Since GA keeps a lot of metrics (browser version, OS version, etc.), it could give more context on the test failures while giving a better big picture of the state of cross-browser compatibility.

I don’t think I will try to implement it, but I took the idea pretty far in my head and I think it could be an interesting approach to gathering and centralizing unit test results, so I thought I’d share it here.

Brian Slesinsky (March 20, 2009 at 2:40 pm)

I think you may be overestimating how much people want to volunteer for manual testing, but perhaps it could be combined with Mechanical Turk?

Justin Meyer (March 20, 2009 at 3:04 pm)

I’m a little confused about how this works exactly.

“Open Source JavaScript libraries submit their test suite batches to the central server and users join up to help out.”

Let’s say I wanted to use this with the next version of JMVC :). The ideal would be to have any commit immediately push out the latest version and get an email shortly afterwards letting me know everything passed. How does this submission work?

Unless the client is actually just directing the user’s browser (or internal frame) to the page where I host JMVC’s tests. Somehow I would have to let the JS Client know the tests are done, and the JS Client would simply return to looking for other things to test.

But the following makes that seem unlikely:

“The server collects test suites and sends them out to the respective clients.”

I actually like the idea of just sending the browser somewhere else to test. This would be great for corporate apps that often have a lot of Ajax requests mixed with straight DOM-manipulating code.

Of course, security issues might be a concern, but if you are already sending JS to be executed, it’s not making anything worse.

Terry Riegel (March 20, 2009 at 4:21 pm)

@Brian Slesinsky

I think a test suite like this wouldn’t necessarily rely on volunteers, as a developer could run the test suites on a room full of computers they own, using virtualization.

Having volunteers would be great but a person could setup some (maximum) number of virtual machines on one machine to maximize the testing.

Markus (March 20, 2009 at 4:43 pm)

Very good idea!

JS testing always looked a bit clumsy to me, even with the more trivial examples. I realized this while trying to make my own version of jUnit http://github.com/yizzreel/jsunit/tree/master (following Kent Beck’s recommendation to implement xUnit in any language you learn). It wasn’t a big deal to implement, though; Prototype works great when you need to create classes, hierarchies, and structure. But I was impressed by how rapidly it went from a promising JS testing solution for my projects to a completely useless one in real-world applications: there were too many different “scenarios” to test.

I can barely imagine the amount of work you’re accomplishing to test jQuery in all systems, browsers and environments possible, it’s a remarkable work indeed.

I’ll follow your TestSwarm project, it looks promising.

Damon Oehlman (March 20, 2009 at 5:04 pm)

Nice work John. This is a great idea and a very innovative solution to a complex problem. Community-powered, test-driven development. Looking forward to participating in the alpha and checking out the results.

John Resig (March 20, 2009 at 5:17 pm)

@Justin Meyer: Your assumption is correct. You leave everything on your own server (so you would do a checkout of the current changeset/revision and give TestSwarm a URL [or URLs] to where the suites are hosted). This way you can still have full control over your tests. In my initial tests this seems to work pretty well (even when dancing around the security issues).

Sylvain Pasche (March 20, 2009 at 5:33 pm)

I don’t know what your plans are for managing the browsers on the client machines, but if some automation is needed in this area I have some pointers to share.

* http://code.google.com/p/browsertests/source/browse/trunk/BrowserTests/browsertests/runner/browser.py: A Python module for launching browsers, maximizing the window, testing if they are still alive and so on. Some parts were inspired by the browsershots project, and there is also useful code in there: http://trac.browsershots.org/browser/trunk/shotfactory/shotfactory04/gui/windows.

* Chromium site compare tool: http://src.chromium.org/viewvc/chrome/trunk/src/tools/site_compare/. This is Python and Windows only for the moment. One nice thing is that it can simulate input on the browser (mouse movements, keystrokes, …) without going through JavaScript (something Patrick described above).

* Now I see that Selenium can do something like that too (Launching browsers, creating profiles) (http://tinyurl.com/dy2quu). I don’t know what APIs are available in Java for interacting tightly with the OS in order to do things such as moving windows, listing processes, simulating events. In Python the above projects are using Python win32 on Windows for plugging in the win32 API, appscript/PyObjc on Mac, and the wnck library on Linux.

Mathias Bynens (March 21, 2009 at 7:00 am)

TestSwarm is like SETI@home, only way cooler.

Dr Nic (March 21, 2009 at 10:05 am)

John, will TestSwarm include per-browser plugin like fireunit? If I make http://jsunittest.com integrate with fireunit will TestSwarm integration follow nicely from there, with similar APIs in each browser?

mikeal (March 21, 2009 at 1:46 pm)

This is a f’ing great idea.

One of my favorite features, which is rarely used, in buildbot is the fact that the slaves are essentially untrusted so anyone in the community can setup a client if they have a spare machine. This is like that on crack for js tests :)

Out of curiosity, what are you writing the server in?

Some work that I’m doing for windmill2 might fit really well in to this.

John Resig (March 21, 2009 at 2:19 pm)

@Sylvain: Yeah, I don’t explicitly provide any mechanisms for launching, re-launching, or loading URLs in browsers – there’s a bunch of good mechanisms for that already (as you’ve pointed out).

@mikeal: The server is done in PHP (since it’s mostly just a thin layer for updating a MySQL db – and I wanted it to be able to run on as many servers as possible, without limitations).

RV (March 22, 2009 at 10:27 pm)

This is a good approach to testing by crowdsourcing. As you said, a Mechanical Turk of testing.

Dustin Machi (March 22, 2009 at 11:16 pm)

John,

How about giving instructions/scripts on how participants could launch a separate browser instance with a standardized profile (or a standardized set of profiles that we want to test against)? This would have the advantage of avoiding too many custom configs (downsides of skipping these tests aside) as well as avoiding interference with the user’s main browser instance.

Dustin

Nick Tulett (March 23, 2009 at 9:59 am)

Finding ways to run more tests in parallel is like providing a developer with 2 PCs – yes they *could* in theory do their work faster but running tests is not the major time component of testing any more than typing is what developers really spend their time doing.

Yes, this system will allow you to run more tests but will not create tests nor interpret the results.

One acceptable approach to testing systems with many interdependent inputs is simply to test for pair-wise combinations, rather than for all possible combinations. e.g. ensure you have a test (for instance) for each pair of OS and browser, each pair of OS and mimetype and each pair of browser and mimetype, but NOT every combination of OS, browser and mimetype.

It may not be obvious what the difference is from reading that, but the result is *significantly* fewer test cases to run, without taking much of a hit on coverage. It works because a single test case can cover one or more of these test condition pairs. It relies on the empirical evidence that most bugs arise when 2 incompatible conditions co-exist. This is not to say that bugs don’t arise for 3 or more incompatible conditions but these are rare enough to make it too expensive to find them. If you are quality paranoid, or dealing with life-critical systems, please do test everything you can. Just bear in mind that it will take forever.

It’s an interesting exercise in combinatorics to produce the test condition pairs – TCONFIG is available if you want to skip the hard sums.
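To make the pairwise idea concrete, here is a small greedy sketch in Python. It is not TCONFIG’s algorithm; it simply starts from the full Cartesian product and repeatedly picks the configuration that covers the most not-yet-covered value pairs, until every pair of values across every two factors appears in at least one chosen configuration. The factor values are illustrative examples drawn from the post.

```python
from itertools import combinations, product


def pairs_of(config):
    """All ((factor, value), (factor, value)) pairs covered by one configuration."""
    return {
        ((i, config[i]), (j, config[j]))
        for i, j in combinations(range(len(config)), 2)
    }


def greedy_pairwise(factors):
    """Pick configurations until every value pair of every two factors is covered."""
    candidates = list(product(*factors))
    uncovered = set().union(*(pairs_of(c) for c in candidates))
    chosen = []
    while uncovered:
        # Greedy step: the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        chosen.append(best)
        uncovered -= pairs_of(best)
    return chosen


factors = [
    ["Windows", "OS X", "Linux"],                  # OS
    ["Firefox 3", "Opera 10", "Firefox Nightly"],  # browser
    ["text/html", "application/xhtml+xml"],        # mimetype
    ["standards mode", "quirks mode"],             # doctype
]

full = list(product(*factors))
pairwise = greedy_pairwise(factors)
print(len(full))      # 36
print(len(pairwise))  # fewer configurations; every value pair is still covered
for config in pairwise:
    print(config)
```

The saving comes at the cost Nick describes: a specific three-way combination (say Linux + Opera 10 + quirks mode) is no longer guaranteed to be run.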

Graham McKinlay (March 24, 2009 at 9:08 am)

This project sounds particularly interesting. I’ve been using Selenium Grid to help scale the testing of a corporate website. Although farming out our tests to machines in a larger swarm outside the company would be inappropriate, and in most cases impossible, this project has certainly caught my attention as something to keep an eye on.

Antony Kennedy (March 24, 2009 at 4:46 pm)

Would it be possible to encrypt the traffic somehow? Probably not since it needs to run in a browser. I can think of many companies that would love something like this, but wouldn’t want their pre-production code being seen by the world.

Still – genius.

Peter J Cooper (March 24, 2009 at 8:28 pm)

@John great idea, well done.

@Matt strongly agree the client stats will be important. They would also show strategic industry bottlenecks (which apps are having trouble where, and how fast they are fixed), becoming a more strategic ‘over the horizon radar’ for how responsive dev teams are.

@h3 is on to something with the Google Analytics link here. Not sure of the exact angle, but leveraging their continued sophistication in an open way would get a whole bunch of network effects going, e.g. benchmarking.

Great idea and good open execution, nice one.

Bryce Ott (March 25, 2009 at 12:21 am)

I’m interested to know what, if any, safeguards may be in place or planned for the protection of clients volunteering their browser to run foreign JS. Is that dependent simply on trusting who runs the TestSwarm server? That is probably fine in many use cases, but what if there is some ‘TestSwarm Authority’ so to speak as it sounds like may be planned, that allows developers to post their code and let others volunteer to run clients to test it? In that use case, is there anything to prevent me from uploading a malicious test suite that in turn gets executed by well intending volunteers?

John Ryding (March 25, 2009 at 12:29 am)

Were you inspired by the recent work of distributed computing with clusters in js?

http://hackaday.com/2009/03/03/distributed-computing-in-javascript/

markc (March 25, 2009 at 4:33 am)

How about leveraging the testing swarmbot to also run tests against websites in general? Testing for levels of xHTML and CSS validation with accumulated stats would be interesting, then on to tests for website functionality would also be of interest to those who submitted tests for their particular site. While we are about it, swarmsource the resulting datasets to summarize and present pretty graphs… in color!

@Bryce Ott: anyone caught, and they will be, inserting malicious code would be outed in short order never to be trusted again. It could happen but I would imagine the discovery rate would be so quick that any serious long term effects would be minimal. IMVHO.

kikito (March 25, 2009 at 5:47 am)

Hi John,

I’d like to raise my own concerns regarding safety measures. Like Bryce suggests, it is conceivable that the JavaScript tests could be used for malicious purposes, and I don’t agree that these scripts are necessarily easy to identify. A test could be used to send user information to a server URL, for example.

I think this deserves some thought. I’m thinking about cookies (only those created by the tests themselves should be allowed) or opened windows (only those created by the tests should be accessible). I hope this makes sense; I’m not an expert on JavaScript intrusion.

Thomas Broyer (March 25, 2009 at 8:48 am)

John, the XHTML Transitional DOCTYPE used in http://jquery.com/test/ (which you describe as the “correct doctype”) triggers “almost standards mode”, not “standards mode”, in most browsers.

You actually need 3 versions of each test suite (for text/html, plus a 4th one if you really do want to support application/xhtml+xml) when there might be differences (e.g. positioning, when there’s a table containing images).

And in the cases where you do not have to check against “almost standards mode”, you should stick to “standards mode” instead of “almost standards mode” as jQuery’s actually doing (this one rule is just a matter of taste).

See http://hsivonen.iki.fi/doctype/#handling

Babu Maddhuri (March 25, 2009 at 10:09 am)

Looks like there is some light at the end of the tunnel for mobile browsers with Test Swarm.

Looking forward to helping alpha test!

Americo Savinon (March 25, 2009 at 12:25 pm)

Great Idea, like Mathias said… this is like SETI@home but even better.

Estas Cabron John!

(You are the man in English)

PlanBForOpenOffice (March 25, 2009 at 1:35 pm)

Talk to Google about embedding advertisements into the test pages. If the testers click, you and Google make money ;-) Or maybe better not to talk to Google; they would consider that click fraud.

But advertising could support the projects, maybe through banner-like ads for branding. The same could be said for internal corporate tests if you replace the banner ad with the motivational quote of the month or simply internal ads.

Marc Guillemot (March 26, 2009 at 4:46 am)

Interesting idea.

Nevertheless, I see an area that will be non-trivial: result analysis. When running tests on a large number of uncontrolled browsers, it is almost certain that some false negatives will be reported.

mjl69 (April 4, 2009 at 11:18 pm)

Like seti@home but we have to use our own ears to listen out for the aliens.

Hoffmann (April 6, 2009 at 8:12 pm)

Ever thought about paid beta testers? You could run your test suite against just one browser per commit and hand over the rest to beta testers. They can also test your code for things that can’t be tested by test suites (especially in the non-core part of the library).

You would be giving your developers more time while getting better error-handling.

Dean Biron (April 21, 2009 at 1:35 pm)

A few questions you might want to think about:

What is it that you hope to achieve by running so many tests? Do you believe that you will find valuable bugs this way?

How will you process the mass of results, which includes investigating reported failures?

What is the length of your feedback loop? Are you better off running more variants of your unit tests if it takes hours or days to know the results?

These are all typical questions to ask about any software test automation effort.

I would humbly submit that effective testing is achieved by being smart about which tests you run, not through brute force attempts to exhaustively cover a massive configuration matrix. Pairwise testing has already been mentioned. Risk-based testing is another strategy that can help you focus your testing efforts on producing value.

The sort of massive, distributed effort you are proposing, while technically interesting, is only worthwhile if there is sufficient value in the results. Imagine SETI@home finding a positive result. It would be revolutionary. A failing unit test on an obscure browser configuration, probably not as valuable.

That being said, there may well be significant value in the technology you have built if it is used in the right context.