This summer I had the opportunity to attend NodeConf and it was a fantastic experience. I really appreciated how every session was a hands-on coding session: I felt like I walked away knowing how to put a bunch of advice directly into practice.

One of my favorite sessions was the one run by James Halliday and Max Ogden exploring Node streams. Specifically, they sat us down and had us run through the amazing Stream Adventure program. It’s an interactive series of exercises designed to help you understand streams better. Seeing interesting ways of using streams in practice was quite eye-opening for me. (I also recommend checking out the awesome Stream Handbook if streams are totally foreign to you.)

I had made limited use of streams before (namely with the request and fs modules) but I was still early on. Over the past few months I’ve spent more time researching Node modules that make good use of streams and have tried to apply them in my various side projects. In theory I absolutely love streams: they are the chainable piping amazingness that exists in UNIX and that I aspired to achieve with jQuery. In practice many Node stream modules are poorly written, poorly documented, out of date, or just obscure. So while using Node streams should theoretically be really easy, in practice it becomes quite challenging.

In my explorations I was starting to build up a pile of streaming Node modules that I *knew* worked correctly (or, at least, as I expected them to). Namely, they supported streams in Node 0.10+ and they supported being .pipe()’d to and from (where applicable). I realized that in a perfect world Node streams truly are like Lego bricks – it becomes quite easy to snap a few of them together to build a chained flow for your data to pass through.

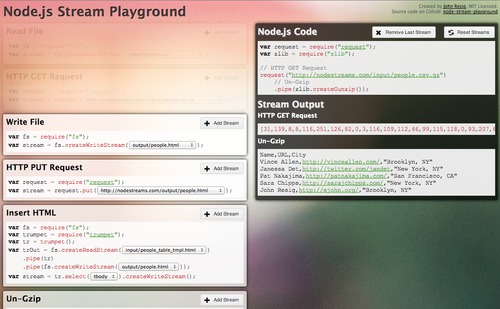

With that in mind I built an exploration that I hope will help others to understand the simplicity and power of Node streams: The Node.js Stream Playground.

More information:

- http://nodestreams.com/

- https://github.com/jeresig/node-stream-playground

- https://npmjs.org/package/stream-playground

The Node.js Stream Playground was created to help Node.js developers better understand how streams work by showing a number of use cases that are easily plug-and-play-able. Additionally, detailed logging is provided at every step to help users better understand what events the streams are emitting and exactly what their contents are.
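To give a rough idea of what this kind of per-step logging involves, here is a minimal sketch using only Node's built-in stream module. The createLogger helper and its label argument are hypothetical names made up for illustration; this is not the playground's actual logging code, and it assumes a Node version that provides Readable.from.

```javascript
const { Readable, PassThrough } = require("stream");

// Hypothetical helper: a pass-through stream that records every chunk
// it sees, plus the "end" event, and hands the data along unchanged.
function createLogger(label, log) {
    const logger = new PassThrough();
    logger.on("data", function(chunk) {
        log.push(label + " data: " + chunk.toString());
    });
    logger.on("end", function() {
        log.push(label + " end");
    });
    return logger;
}

const log = [];

// Resolves with the collected log once the stream has ended.
const done = new Promise(function(resolve) {
    Readable.from(["hello ", "streams"])
        .pipe(createLogger("source", log))
        .on("end", function() {
            resolve(log);
        });
});

done.then(function(entries) {
    console.log(entries.join("\n"));
});
```

A logger like this can be dropped between any two .pipe() calls, which is roughly the effect you see in the playground after each block.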

How to Use the Playground

I hope you’ll get the most out of the playground by exploring concepts for yourself – looking at what happens when you pipe different streams to each other, looking at the data being logged, and attempting to understand (for yourself) what exactly is happening and why. Note that all of the code being generated is valid Node code and can be copied, pasted, and run on your own computer (assuming you have the appropriate npm modules installed).

I should note that this isn’t the be-all and end-all of Node stream education: there is still a lot to learn with regard to error handling, back pressure, and all the intricate stream concepts that exist. I hope to write some more on these concepts some day.

With that being said here are some good examples of some of the actions that you can do:

Copying a File

- Select Read File and input/people.json.

- Select Write File and output/people.json.

The resulting code:

    var fs = require("fs");

    // Read File
    fs.createReadStream("input/people.json")
        // Write File
        .pipe(fs.createWriteStream("output/people.json"));

This will copy the JSON file from one location to another. At the time of writing this is the preferred way of copying files in Node, since there is no built-in utility method for doing this.
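One thing the generated snippet does not show is how to tell when the copy has finished or failed. Here is a minimal sketch of that, assuming scratch files in the OS temp directory; the copyWithStreams helper, the sample data, and the paths are made up for illustration and are not part of the playground.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Assumed scratch locations, used only to keep the sketch self-contained.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "stream-copy-"));
const source = path.join(dir, "people.json");
const target = path.join(dir, "people-copy.json");
fs.writeFileSync(source, JSON.stringify([{ name: "John Resig" }]));

// Hypothetical helper: copies a file with streams and reports the outcome.
function copyWithStreams(from, to) {
    return new Promise(function(resolve, reject) {
        const reader = fs.createReadStream(from);
        const writer = fs.createWriteStream(to);
        // .pipe() does not forward errors, so listen on both ends.
        reader.on("error", reject);
        writer.on("error", reject);
        // "finish" fires once all of the data has been flushed to the target.
        writer.on("finish", resolve);
        reader.pipe(writer);
    });
}

const copied = copyWithStreams(source, target).then(function() {
    return fs.readFileSync(target, "utf8");
});

copied.then(function(contents) {
    console.log("copied:", contents);
});
```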

Downloading a File

This will download the JSON file from the specified URL and save it to the local file.

- Select HTTP Get Request and http://nodestreams.com/input/people.json.

- Select Write File and output/people.json.

The resulting code:

    var request = require("request");
    var fs = require("fs");

    // HTTP GET Request
    request("http://nodestreams.com/input/people.json")
        // Write File
        .pipe(fs.createWriteStream("output/people.json"));

Un-Gzipping a File

- Select Read File and input/people.csv.gz.

- Select Un-Gzip.

- Select Write File and output/people.csv.

The resulting code:

    var fs = require("fs");
    var zlib = require("zlib");

    // Read File
    fs.createReadStream("input/people.csv.gz")
        // Un-Gzip
        .pipe(zlib.createGunzip())
        // Write File
        .pipe(fs.createWriteStream("output/people.csv"));

Note that the initial data that is coming out of the Read File is just an array of numbers – this is to be expected. In reality it’s a Node Buffer holding all of the raw binary data. It’s not until after we Un-Gzip the file that we start to have data that’s in a more usable form.
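To make the Buffer point concrete, here is a small sketch that gzips some made-up CSV text in memory and then un-gzips it through a stream. The sample text is an assumption standing in for input/people.csv, and the sketch assumes a Node version that provides Readable.from.

```javascript
const zlib = require("zlib");
const { Readable } = require("stream");

// Made-up CSV text standing in for input/people.csv.
const csvText = "Name,City\nJohn Resig,\"Brooklyn, NY\"\n";

// Gzipped data is raw binary held in a Buffer, not readable text.
const gzipped = zlib.gzipSync(csvText);
console.log(Buffer.isBuffer(gzipped));
// Every gzip stream starts with the magic bytes 0x1f 0x8b.
console.log(gzipped[0].toString(16), gzipped[1].toString(16));

// Only after passing through Gunzip do the bytes decode to usable text.
const unzipped = new Promise(function(resolve, reject) {
    const chunks = [];
    Readable.from([gzipped])
        .pipe(zlib.createGunzip())
        .on("data", function(chunk) {
            chunks.push(chunk);
        })
        .on("end", function() {
            resolve(Buffer.concat(chunks).toString("utf8"));
        })
        .on("error", reject);
});

unzipped.then(function(text) {
    console.log(text);
});
```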

Converting a TSV to HTML

In this case we’re going to manually parse the TSV (without headers) just to show you some of the string operations provided by event-stream.

- Select Read File and input/people.tsv.

- Select Split Strings.

- Select Split Strings into Array.

- Select Convert Array w/ Sprintf (with the default table row HTML).

- Select Join Strings (this will insert an endline between all the individual table rows).

- Select Concat Strings (this will combine all the individual table rows and endlines into a single string).

- Select Wrap Strings (with the default table HTML).

- Select Write File and output/people.html.

The resulting code:

    var fs = require("fs");
    var es = require("event-stream");
    var vsprintf = require("sprintf").vsprintf;

    // Read File
    fs.createReadStream("input/people.tsv")
        // Split Strings
        .pipe(es.split("\n"))
        // Split Strings into Array
        .pipe(es.mapSync(function(data) {
            return data.split("\t");
        }))
        // Convert Array w/ Sprintf
        .pipe(es.mapSync(function(data) {
            return vsprintf("<tr><td><a href='%2$s'>%1$s</a></td><td>%3$s</td></tr>", data);
        }))
        // Join Strings
        .pipe(es.join("\n"))
        // Concat Strings
        .pipe(es.wait())
        // Wrap Strings
        .pipe(es.mapSync(function(data) {
            return "<table><tr><th>Name</th><th>City</th></tr>\n" + data + "\n</table>";
        }))
        // Write File
        .pipe(fs.createWriteStream("output/people.html"));

This should give you some HTML that looks something like this:

    <table><tr><th>Name</th><th>City</th></tr>
    <tr><td><a href='http://vinceallen.com/'>Vince Allen</a></td><td>Brooklyn, NY</td></tr>
    <tr><td><a href='http://twitter.com/jandet'>Janessa Det</a></td><td>New York, NY</td></tr>
    <tr><td><a href='http://patnakajima.com/'>Pat Nakajima</a></td><td>San Francisco, CA</td></tr>
    <tr><td><a href='http://sarajchipps.com/'>Sara Chipps</a></td><td>New York, NY</td></tr>
    <tr><td><a href='https://johnresig.com/'>John Resig</a></td><td>Brooklyn, NY</td></tr>
    </table>
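If you are curious what blocks like Split Strings and the row conversion do under the hood, the same TSV-to-HTML flow can be sketched with nothing but Node's built-in Transform streams. This is an illustrative approximation, not how event-stream is implemented: the splitLines and toRow helpers are hypothetical, the two sample rows are inlined rather than read from input/people.tsv, and the line splitter ignores the case of a multi-byte character being cut across two chunks.

```javascript
const { Readable, Transform } = require("stream");

// Sample rows in the same Name / URL / City column order.
const tsv = "John Resig\thttps://johnresig.com/\tBrooklyn, NY\n" +
    "Sara Chipps\thttp://sarajchipps.com/\tNew York, NY";

// Hypothetical helper: splits incoming text on newlines and emits one
// line per chunk, holding back any partial line until more data arrives.
function splitLines() {
    let leftover = "";
    return new Transform({
        readableObjectMode: true,
        transform(chunk, encoding, callback) {
            const lines = (leftover + chunk.toString()).split("\n");
            leftover = lines.pop();
            lines.forEach((line) => this.push(line));
            callback();
        },
        flush(callback) {
            if (leftover) {
                this.push(leftover);
            }
            callback();
        }
    });
}

// Hypothetical helper: turns one TSV line into one table row.
function toRow() {
    return new Transform({
        objectMode: true,
        transform(line, encoding, callback) {
            const cols = line.split("\t");
            callback(null, "<tr><td><a href='" + cols[1] + "'>" + cols[0] +
                "</a></td><td>" + cols[2] + "</td></tr>");
        }
    });
}

// Collect the rows, then join and wrap them once the stream ends.
const html = new Promise(function(resolve, reject) {
    const rows = [];
    Readable.from([tsv])
        .pipe(splitLines())
        .pipe(toRow())
        .on("data", function(row) {
            rows.push(row);
        })
        .on("end", function() {
            resolve("<table><tr><th>Name</th><th>City</th></tr>\n" +
                rows.join("\n") + "\n</table>");
        })
        .on("error", reject);
});

html.then(function(output) {
    console.log(output);
});
```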

Download Encoded, Gzipped, CSV and Convert to HTML

This is the big one:

- Select HTTP Get Request and http://nodestreams.com/input/people_euc-jp.csv.gz.

- Select Un-Gzip (note that some of the characters are encoded incorrectly; we need to fix this!).

- Select Change Encoding (even though it comes out as a buffer, this is correct, as we’ll see in a moment).

- Select Parse CSV as Object.

- Select Convert Object w/ Handlebars (with the default table row HTML).

- Select Join Strings (this will insert an endline between all the individual table rows).

- Select Concat Strings (this will combine all the individual table rows and endlines into a single string).

- Select Wrap Strings (with the default table HTML).

- Select HTTP PUT Request and http://nodestreams.com/output/people.html.

The resulting code:

    var request = require("request");
    var zlib = require("zlib");
    var Iconv = require("iconv").Iconv;
    var csv = require("csv-streamify");
    var Handlebars = require("handlebars");
    var es = require("event-stream");

    var tmpl = Handlebars.compile("<tr><td><a href='{{URL}}'>{{Name}}</a></td><td>{{City}}</td></tr>");

    // HTTP GET Request
    request("http://nodestreams.com/input/people_euc-jp.csv.gz")
        // Un-Gzip
        .pipe(zlib.createGunzip())
        // Change Encoding
        .pipe(new Iconv("EUC-JP", "UTF-8"))
        // Parse CSV as Object
        .pipe(csv({objectMode: true, columns: true}))
        // Convert Object w/ Handlebars
        .pipe(es.mapSync(tmpl))
        // Join Strings
        .pipe(es.join("\n"))
        // Concat Strings
        .pipe(es.wait())
        // Wrap Strings
        .pipe(es.mapSync(function(data) {
            return "<table><tr><th>Name</th><th>City</th></tr>\n" + data + "\n</table>";
        }))
        // HTTP PUT Request
        .pipe(request.put("http://nodestreams.com/output/people.html"));

You should have the same output as the last run. A lot is happening here, but even with all of these steps Node streams still make it relatively easy to complete it all. It’s at this point that you can truly start to see the power and expressiveness of streams.

Adding in New Streams

If you’re interested in extending the playground and adding in new pluggable stream “blocks” you can simply edit blocks.js and add in the stream functions. A common stream block would look something like this:

    "Change Encoding": function(from /* EUC-JP */, to /* UTF-8 */) {
        var Iconv = require("iconv").Iconv;
        return new Iconv(from, to);
    },

The property name is the full title/description of the stream. The arguments to the function are variables that you wish the user to populate. The comments immediately following the argument names are the default values (you can provide multiple values by separating them with a |).
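To illustrate how defaults written as comments can be recovered at all, here is a rough sketch that reads them back out of a function's source text. The describeArgs helper is hypothetical and is not the playground's own parser; the sample block returns a plain array instead of an Iconv stream so that the sketch has no dependencies, and it assumes that default values contain no parentheses.

```javascript
// A block definition in the shape described above (simplified body).
const blocks = {
    "Change Encoding": function(from /* EUC-JP */, to /* UTF-8 */) {
        return [from, to];
    }
};

// Hypothetical helper: extracts each argument name and the default
// value(s) from the comment that immediately follows it.
function describeArgs(fn) {
    // Function.prototype.toString() keeps the comments in the source text.
    const source = fn.toString();
    const params = source.slice(source.indexOf("(") + 1, source.indexOf(")"));
    const pattern = /([A-Za-z_$][\w$]*)\s*\/\*\s*(.*?)\s*\*\//g;
    const args = [];
    let match;
    while ((match = pattern.exec(params)) !== null) {
        // Multiple default values are separated by "|".
        args.push({ name: match[1], values: match[2].split("|") });
    }
    return args;
}

console.log(describeArgs(blocks["Change Encoding"]));
```

For the block above this yields one entry for from and one for to, each carrying the values listed in its comment.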

The streams are split into 3 types: “Readable”, “Writable”, and “Transform” (streams that read content, modify it, and pass it through). Typically it is assumed that “Readable” streams will be the first ones that you can choose in the playground, “Writable” streams will end the playground, and everything else is just “.pipe()able”.
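As a quick illustration of the three types, here is a minimal sketch built on Node's own stream classes. The names source, upperCase, and sink are made up for this example, and it assumes a Node version that provides Readable.from.

```javascript
const { Readable, Writable, Transform } = require("stream");

// Readable: where the data comes from (the first block in a chain).
const source = Readable.from(["node", "streams"]);

// Transform: reads content, modifies it, and passes it through.
const upperCase = new Transform({
    transform(chunk, encoding, callback) {
        callback(null, chunk.toString().toUpperCase());
    }
});

// Writable: where the data ends up (the last block in a chain).
const received = [];
const sink = new Writable({
    write(chunk, encoding, callback) {
        received.push(chunk.toString());
        callback();
    }
});

// "finish" fires on the writable once everything has been written.
const finished = new Promise(function(resolve, reject) {
    sink.on("finish", function() {
        resolve(received);
    });
    sink.on("error", reject);
});

source.pipe(upperCase).pipe(sink);

finished.then(function(chunks) {
    console.log(chunks.join(" "));
});
```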

If you’ve added a new stream please send a pull request and I’ll be happy to add it!

Running Your Own Server

After downloading the code (either from GitHub or npm), be sure to run npm install to install all the dependencies. You can then run node app.js to start a server or, if you wish to run something more robust, you can install naught and then run npm start.

WARNING I have no idea how robust the application’s security is. This application is generating and executing code on the user’s behalf (although it is not allowing arbitrary code to be executed). Feel free to run it on a local server or, if you feel confident in the code, run it on your own server. At the moment I’m running it on a standalone server with nothing else on it, just in case.

Feedback Welcome!

I’d love to hear about how people are using streams and whether this tool has helped you understand how streams work. Let me know if there are particular stream modules that you really like and, if possible, try to add them to the stream playground so that others can experience them as well!

Abderrahmane TAHRI JOUTI (November 15, 2013 at 1:24 pm)

You see, I can compare my emotions that I felt while reading the pipe().pipe().pipe() bit to the first time I read a Harry Potter book. Just Brilliant.

Benjamin Goering (November 15, 2013 at 4:22 pm)

Those who enjoyed this and streams2+ in general may find useful this library we’ve made to use objectMode streams in the browser. http://github.com/Livefyre/stream There are quite a few tests if you want to get a feel for how streams work: https://github.com/Livefyre/stream/blob/master/tests/spec/re…

For a concrete implementation, check out our SDK. We use streams to abstract away the details of paging out Content from our APIs. There is an example in the README. https://github.com/Livefyre/streamhub-sdk

These streams power the bottom 5 apps you see here: http://apps.livefyre.com/

Henry Allen-Tilford (November 15, 2013 at 4:48 pm)

Re: Running on your own server

I made a runnable version to play around with http://runnable.com/UoaTHZMTsIZBAAHG/node-js-stream-playground-for-streams

Chase Adams (November 15, 2013 at 5:29 pm)

Great writeup John!

I have to agree that one of the best sessions I’ve ever had the chance to be in was the Streams Adventures session. It was a really great way to get people up and running and working on their own, and being available if you got stuck.

Thanks for sharing this awesome tool, and it’s always cool to see people walk away from a learning experience and create another learning experience.

Liam Morley (November 15, 2013 at 10:17 pm)

Fantastic. This reminds me of Yahoo! Pipes; it’d be interesting to see how easy it might be to re-implement Yahoo! Pipes with node streams.

gnumanth (November 16, 2013 at 8:10 pm)

I remember your tweet about the same https://twitter.com/jeresig/status/400767466286379008 and a week later this comes out! A very intuitive way to learn streams!

It’s also worth mentioning:

    npm install stream-adventure

Alex Ford (November 20, 2013 at 1:18 pm)

This is great! Thank you very much for this.

Green Playstations (November 27, 2013 at 5:20 am)

This Article is very Great and useful!!