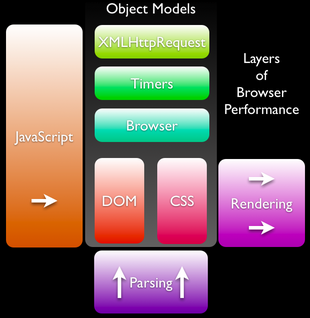

Something that’s frequently befuddled is the differentiation between where JavaScript is executing and where performance hits are taking place. The difficulty is related to the fact that many aspects of a browser engine are reliant upon many others causing their performance issues to be constantly intertwined. To attempt to explain this particular inter-relationship I’ve created a simplified diagram:

To break it down, there are a few key areas:

- JavaScript – This represents the core JavaScript engine. It contains only the most basic primitives (functions, objects, arrays, regular expressions, etc.) for performing operations. On its own it isn’t terribly useful. Speed improvements here have the ability to affect all the various object models.

- Object Models – Collectively these are the objects introduced into the JavaScript runtime which give the user something to work with. These objects are generally implemented in C++ and are imported into the JavaScript environment (for example, XPCOM is frequently used by Mozilla to achieve this). There are numerous security checks in place to prevent malicious scripts from accessing these objects in unintended ways (which produces an unfortunate performance hit). Speed improvements generally come from improving the connecting layer or from removing it altogether.

- XMLHttpRequest and Timers – These are implemented in C++ and introduced into the JavaScript engine at runtime. The performance of these elements only indirectly affects rendering performance.

- Browser – This represents objects like ‘window’, ‘window.location’, and the like. Improvements here also indirectly affect rendering performance.

- DOM and CSS – These are the object representations of the site’s HTML and CSS. When creating a web application everything will have to pass through these representations. Improving the performance of the DOM will affect how quickly rendering changes can propagate.

- Parsing – This is the process of reading, analyzing, and converting HTML, CSS, XML, etc. into their native object models. Improvements in speed can affect the load time of a page (with the initial creation of the page’s contents).

- Rendering – The final painting of the page (or any subsequent updates). This is the final bottleneck for the performance of interactive applications.
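To make the cost of the connecting layer a little more concrete, here is a minimal sketch that simulates it in plain JavaScript. Everything in it is an assumption made for illustration: `nativeNode` is just a plain object standing in for a native object, and `wrapWithChecks` is a hypothetical helper built on `Proxy`. It is not how XPCOM or any real browser implements its security checks; it only shows that every property read pays for a trip through the layer.

```javascript
// A plain object standing in for a native (C++) object. Purely illustrative.
const nativeNode = { nodeName: "DIV", childCount: 3 };

// Counts how many times the simulated connecting layer is crossed.
let checks = 0;

// Hypothetical connecting layer: every property read passes a check first.
function wrapWithChecks(target, allowed) {
  return new Proxy(target, {
    get(obj, prop) {
      checks++;
      if (!allowed.includes(prop)) {
        throw new Error("Access to '" + String(prop) + "' denied");
      }
      return obj[prop];
    }
  });
}

const node = wrapWithChecks(nativeNode, ["nodeName", "childCount"]);

// Each read of node.childCount crosses the layer once.
let total = 0;
for (let i = 0; i < 1000; i++) {
  total += node.childCount;
}
```

Reading the value once into a local variable before the loop would cross the layer a single time instead of a thousand, which is the script-side equivalent of making the connecting layer cheaper.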

What’s interesting about all of this is that a lot of attention is being paid to the performance of a single layer within the browser: JavaScript. The reality is that the situation is much more complicated. For starters, improving the performance of JavaScript has the potential to drastically improve the overall performance of a site. However, there still remain bottlenecks at the DOM, CSS, and rendering layers. Having a slow DOM representation will do little to show off the improved JavaScript performance. For this reason, when optimization is done it’s frequently handled throughout the entire browser stack.

What’s also interesting is that the analysis of JavaScript performance can itself be affected by any of these layers. Here are some interesting issues that arise:

- JavaScript performance outside of a browser (in a shell) is drastically faster than inside of it. The overhead of the object models and their associated security checks is enough to make a noticeable difference.

- An improperly coded JavaScript performance test could be affected by a change to the rendering engine. If the test were analyzing the total runtime of a script, a degree of accidental rendering overhead could be introduced as well. Care needs to be taken to factor this out.
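One way to factor rendering out of a test is to stop the clock before anything touches the page. The sketch below is only an illustration under that assumption: `timeScript` and `buildLabel` are hypothetical names, and the commented-out DOM line stands in for whatever the test would do with its result.

```javascript
// Hypothetical helper: time only the pure-JavaScript work, repeated many times.
function timeScript(fn, iterations) {
  const start = Date.now();
  let result;
  for (let i = 0; i < iterations; i++) {
    result = fn(i);
  }
  // The clock stops here, before any DOM or rendering work happens.
  const elapsed = Date.now() - start;
  return { elapsed: elapsed, result: result };
}

// Work under test: pure computation, no DOM access.
function buildLabel(i) {
  return "item-" + (i * 2);
}

const timing = timeScript(buildLabel, 100000);

// Only after timing has finished would the result be handed to the page, e.g.:
// document.getElementById("out").textContent = timing.result;
```

Had the DOM write been placed inside the timed loop, the measurement would include DOM and rendering overhead, and a change to the rendering engine could shift the "JavaScript" score.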

So while the improvement of JavaScript performance is certainly a critical step for browser vendors to take (as much of the rest of the browser depends upon it) it is only the beginning. Improving the speed of the full browser stack is inevitable.

Dean Edwards (February 28, 2008 at 4:29 am)

Firefox really sucks for rendering speed. What’s with that?

Sean Hogan (February 28, 2008 at 5:06 am)

OTOH, Javascript is the only bit most of us can do anything about, so it’s natural that it will get more visibility. Hammer, nail, blahblahblah.

m0n5t3r (February 28, 2008 at 6:08 am)

@dean: Firefox 3 has improved the rendering speed a lot; I am currently working on a web interface for a product, and it involves lots of drag and drop, and the difference between FF2 and FF3b3 is staggering. That being said, the new Yahoo mail interface still moves like crap, so a fast browser can’t help bad code too much :D

@john: I tend to disagree with you about shell vs browser javascript speed differences; I did a while back a little stress test for the jQuery.sprintf plugin that I wrote, and consistently achieved better times in Firefox 2 than in spidermonkey. But then again, I only benchmarked String.replace(regex, callback) with this :)

Dig (February 28, 2008 at 9:32 am)

Yeah. I noticed that while FF3 is apparently beating out Kestrel in SunSpider, Kestrel still destroys everyone on the tests they linked to at:

http://nontroppo.org/timer/kestrel_tests/

Most of those tests are really really DOM intensive though, requiring hundreds to thousands of DOM nodes to be created and changed simultaneously. I don’t know how realistic that is for the real world, but FF’s speed in the DOM doesn’t seem improved much this release (probably why most of the tXUL performance bugs for FF3 seemed focused on extra removing DOM too).

h3 (February 28, 2008 at 10:47 am)

@dean

Yeah .. and with firebug installed it almost revives old dialup memories *sigh*

Have you tried Epiphany ? It’s the gecko engine too but the speed is really not comparable, I always wondered why..

And yes it’s really improved in FF3 :)

Tom (February 28, 2008 at 11:41 am)

To me, the point of fast JavaScript is the ability to write actual algorithms in JavaScript rather than trying to hack things down to the native layer. Fast, portable, secure code execution opens up worlds of possibilities.

That said, I understand that all the layers matter. CSS and layout (especially dynamic) are intensive beasts.

Brendan Eich (February 28, 2008 at 12:59 pm)

Any big difference in core JS performance between the SpiderMonkey js shell and a browser embedding of SpiderMonkey is (IMHO) a bug to fix. We are monitoring the delta and driving it to zero.

/be

pd (February 29, 2008 at 8:38 am)

The headline for this post might as well be “Passing the buck: JS is not slow, Gecko’s DOM and CSS is the bottleneck”.

To the end user, they don’t care.

The developer who writes a bit of JS and has very little control over performance (cannot set memory usage – even if we wanted to – poor access to perf testing tools) doesn’t care if it’s JS or DOM or CSS either! Essentially they are all the same thing, as JS manipulates DOM and CSS to actually achieve anything.

I’m not really sure what the point of this article is. John, are you doing some expectation management, in that you are trying to ensure people don’t expect mind-blowing perf improvements from JS improvements? Are you trying to ensure focus on perf continues beyond any JS perf improvements?

pd (February 29, 2008 at 8:41 am)

hmm, I wish I saw Brendan’s comment before I wrote mine (page was cached). That’s all that needs to be said Brendan. Thanks for saying that as it makes me feel better at least :)

Julien Huang (February 29, 2008 at 7:42 pm)

Unrelated, but what software did you use to make the diagram?

PS: congratulations for this wonderful tool that’s jQuery, it’s certainly the most intuitive & fluent lib that I have ever used.