A new JavaScript engine has hit the ground running: V8, which powers the brand-new Google Chrome browser.

There are now a ton of JavaScript engines on the market (even when you only look at the ones being actively used in browsers):

- JavaScriptCore: The engine that powers Safari/WebKit (up until Safari 3.1).

- SquirrelFish: The engine used by Safari 4.0. Note: The latest WebKit nightly for Windows crashes on Dromaeo, so I’ve skipped it for now.

- V8: The engine used by Google Chrome.

- SpiderMonkey: The engine that powers Firefox (up to, and including, Firefox 3.0).

- TraceMonkey: The engine that will power Firefox 3.1 and newer (currently in nightlies, but disabled by default).

- Futhark: The engine used in Opera 9.5 and newer.

- IE JScript: The engine that powers Internet Explorer.

A number of performance tests have already been run on the above browsers – and a few of those runs have also included the new Chrome browser. It’s important to look at these numbers and try to gain some perspective on what the tests are testing and how those numbers relate to actual web page performance.

We have three test suites that we’re going to look at:

- SunSpider: The popular JavaScript performance test suite released by the WebKit team. Tests only the performance of the JavaScript engine (no rendering or DOM manipulation). Has a wide variety of tests (objects, function calls, math, recursion, etc.).

- V8 Benchmark: A benchmark built by the V8 team, only tests JavaScript performance – with a heavy emphasis on testing the performance of recursion.

- Dromaeo: A test suite built by Mozilla, tests JavaScript, DOM, and JavaScript Library performance. Has a wide variety of tests, with the majority of time spent analyzing DOM and JavaScript library performance.
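To give a feel for what a suite like these measures, here is a minimal timing sketch: it runs a function several times and reports the mean run time with a "±" spread. The names `summarize`, `timeRuns`, and `workload` are made up for illustration, and the spread used here (sample standard deviation as a percentage of the mean) is an assumption, not necessarily the statistic any of the suites above reports.

```javascript
// Summarise a list of run times (in ms).
// Assumption: "±" is the sample standard deviation relative to the mean.
function summarize(samples) {
  const n = samples.length;
  const mean = samples.reduce((sum, x) => sum + x, 0) / n;
  const variance = n > 1
    ? samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / (n - 1)
    : 0;
  const stdDev = Math.sqrt(variance);
  const relErrorPct = mean === 0 ? 0 : (stdDev / mean) * 100;
  return { mean, stdDev, relErrorPct };
}

// Run fn `runs` times and time each run with the wall clock.
function timeRuns(fn, runs) {
  const samples = [];
  for (let i = 0; i < runs; i++) {
    const start = Date.now();
    fn();
    samples.push(Date.now() - start);
  }
  return summarize(samples);
}

// Hypothetical workload: pure JavaScript, no DOM involved.
function workload() {
  let total = 0;
  for (let i = 0; i < 1e6; i++) total += Math.sqrt(i);
  return total;
}

const result = timeRuns(workload, 5);
console.log(`${result.mean.toFixed(2)}ms ±${result.relErrorPct.toFixed(2)}%`);
```

A pure-JavaScript workload like this one says nothing about DOM or rendering cost, which is why the choice of suite matters so much for the numbers that follow.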

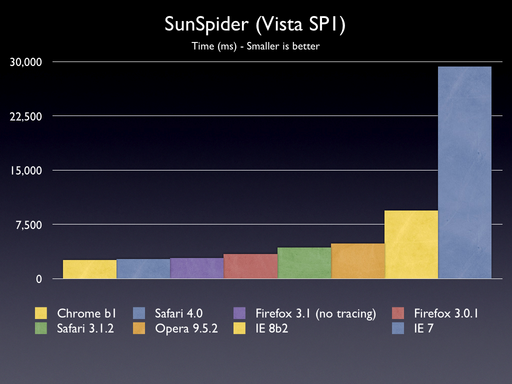

SunSpider

Let’s start by taking a look at some results from WebKit’s SunSpider test (which covers a wide selection of pure-JavaScript functionality). Here is the breakdown:

We see a fairly steady curve, heading down to Chrome (ignoring the Internet Explorer outliers). Chrome is definitely the fastest in these results – although the results from the new TraceMonkey engine aren’t included.

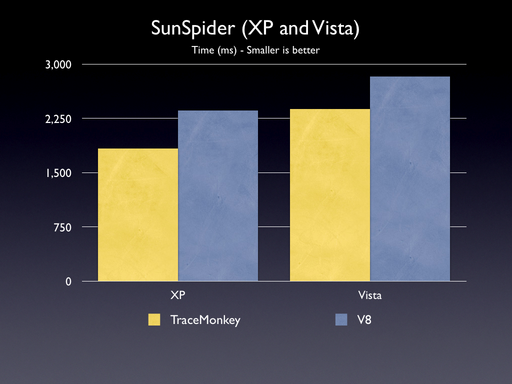

Brendan Eich pulled together a comparison, last night, of the latest TraceMonkey code against V8.

We already see TraceMonkey (under development for about 2 months) performing better than V8 (under development for about 2 years).

The biggest thing holding TraceMonkey back, at this point, is its recursion tracing. As of this moment no tracing is done across recursive calls (which puts TraceMonkey as being about 10x slower than V8 at recursion). Once recursion tracing lands for Firefox 3.1 I’ll be sure to revisit the above results.
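As a rough illustration of the distinction, the sketch below computes results with a plain loop and with recursive calls. The function names are hypothetical. Going by the description above, the loop is the kind of code tracing can already help with, while the recursive versions would not be traced yet; the snippet only shows the two code shapes and makes no claim about how any engine compiles them.

```javascript
// Loop version: a single hot loop with no calls inside it.
function sumLoop(n) {
  let total = 0;
  for (let i = 1; i <= n; i++) total += i;
  return total;
}

// Recursive version of the same sum: one call per step.
function sumRecursive(n) {
  return n === 0 ? 0 : n + sumRecursive(n - 1);
}

// A classic recursion-heavy workload: each call fans out into two more.
function fib(n) {
  return n < 2 ? n : fib(n - 1) + fib(n - 2);
}

console.log(sumLoop(1000));      // 500500
console.log(sumRecursive(1000)); // 500500
console.log(fib(20));            // 6765
```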

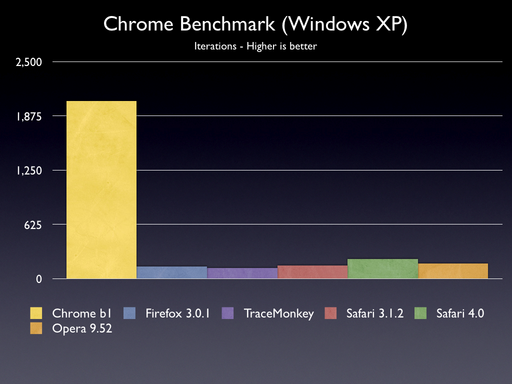

Google Chrome Benchmark

The Chrome team released their own benchmark for analyzing JavaScript performance. This includes a few new tests (different from the SunSpider ones) and is very recursion-heavy:

We can see Chrome decimating the other browsers on these tests. It’s debatable how representative these tests are of real browser performance, considering the hyper-specific focus on minute features within JavaScript.

Note TraceMonkey performing poorly: It’s unable to benefit from any of the tracing due to the lack of recursion tracing (as explained above).

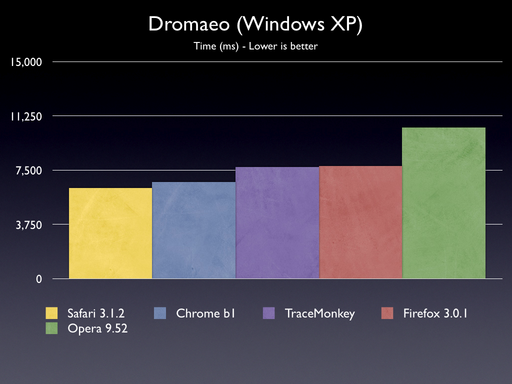

Dromaeo with DOM

Finally, let’s take a more holistic look at JavaScript performance. I’ve been working on the Dromaeo test suite, adding in a ton of new DOM and JavaScript library tests. This assortment provides a much stronger look at how browsers might perform under a normal web browsing situation.

Considering that most web pages are being held back by the performance of the DOM (think table sorters and the like) and not, necessarily, the performance of JavaScript (games, graphics) it’s important to look at these particular details for extended analysis.

The results of a run against the JavaScript, DOM, and library tests (thanks to Asa Dotzler for helping me run the tests):

(No results for IE were provided as the browser crashes when running the tests, unfortunately – also I had trouble getting the WebKit nightlies, with Squirrelfish, to run on Windows, see bug 20626.)

We see a very different picture here. WebKit-based engines are absolutely ahead – but Chrome is lagging behind the latest release of Safari. And while there is a small speed improvement while using TraceMonkey, over regular Firefox, the full potential won’t be unlocked until tracing can be performed over DOM structures (which it is currently incapable of – may not be ready until Firefox 3.2 or so).

One thing is clear, though: The game of JavaScript Performance leapfrog is continuing. With another JavaScript engine in the mix that rapid iteration will only have to increase – which is simply fantastic for end users and application developers.

Update: I’ve posted results for Safari 4.0 wherever I could.

Stefan Hayden (September 3, 2008 at 10:06 am)

it’s sad to see google only feature speed tests that show such a skewed view.

Rizqi Ahmad (September 3, 2008 at 10:07 am)

Very impressive, but I still don’t really understand why the DOM is still slow. When do you think the DOM will be at least as fast as innerHTML?

zafer gurel (September 3, 2008 at 10:09 am)

V8 engine is not as fast as Google says, which shows that it’s more a marketing effort than telling the truth.

Joshua Gertzen (September 3, 2008 at 10:10 am)

Thanks for running this range of tests. We were unable to run the full gamut of tests that you did, but our testing with Dromaeo demonstrated VERY very different results. Our results, as well as many results we saw floating around yesterday, indicate that V8 is at least 3 times faster than Firefox 3.0.1 (450ms vs. 1600ms) and around 2 times faster than Safari nightly (450ms vs. 850ms). We didn’t get a chance to test newer Firefox builds.

In any case, given that your times are much slower, I’m curious what kind of hardware you are running your tests on. Is it possible that V8 is leveraging optimizations only available on newer hardware?

David (September 3, 2008 at 10:12 am)

In the first two benchmarks, did Safari have SquirrelFish? The graphs say Safari 3.1.2

M (September 3, 2008 at 10:13 am)

Andreas

John Resig (September 3, 2008 at 10:15 am)

@Joshua Gertzen: It sounds like you’re using the “regular” Dromaeo tests (which only test a subset of the SunSpider tests). The second version that I linked to includes a number of DOM and JavaScript library tests – which drastically normalize the field.

@David: No, those are just the current versions of Safari (no SquirrelFish). I had trouble getting it to run on Windows, if anyone is able to get it going (and provide the other browser results) I’ll be happy to update my results.

StCredZero (September 3, 2008 at 10:17 am)

Zafer,

The marketing claims are inflated. (What, are you surprised?) Still, the results so far are very solid.

Bill Mill (September 3, 2008 at 10:19 am)

Like Joshua, I’ve seen other results on Dromaeo posted; at http://www.reddit.com/r/programming/comments/6z9op/chrome_is_here/c059qlq several people show much better results for Chrome than for Firefox.

John Resig (September 3, 2008 at 10:20 am)

@Bill Mill: As with Joshua, be sure to use the second version of the Dromaeo tests.

Bill Mill (September 3, 2008 at 10:21 am)

Ahh… I didn’t know about the secret Dromaeo tests, and you responded while I was typing.

Ben Atkin (September 3, 2008 at 10:26 am)

Interesting. It seems that on a speed basis there’s no big improvement, if any, over the next version of TraceMonkey.

One of the features of V8 touted in the Google Chrome comic book is its aggressive garbage collection. Have you investigated this, to see if there’s any major difference between V8’s garbage collection performance and that of other browsers? In the comic, a reason they give for their garbage collection strategy is preventing a large pause while the garbage collector does its work.

Jay Garcia (September 3, 2008 at 10:40 am)

Dude, this is awesome. Great job John.

Jesse Casman (September 3, 2008 at 10:47 am)

Can I ask a dumb question as a “normal” user of browsers? Do I care about the engine behind a browser? Is it all about speed? And if there’s (several) networks between me and the website I’m viewing, plus RAM space, CPU speed, and whatever else, am I ever going to notice a supposedly faster browser? If there was a browser that could quickly switch between engines would that be helpful?

Browsers News (September 3, 2008 at 10:52 am)

Great post, thanks for your effort :)

Joshua Gertzen (September 3, 2008 at 10:53 am)

Ahh, I see John. That makes more sense… didn’t notice that you linked to a version 2 of the test. In any case, I look forward to seeing what tracing through the DOM structures will do for Firefox. Of course, it’s fair to say that Google’s Chrome team won’t just stop with V8. From what I can gather, they focused on raw JS performance first and at this point they just rely on WebKit’s DOM capabilities, which explains why Safari and Chrome are very close in your Dromaeo v2 test.

Ryan Tenney (September 3, 2008 at 10:54 am)

Is it just me, or has a lot of JavaScript seemed exceptionally slow/unresponsive in Chrome? Try playing Old Snakey (in Gmail Labs). I’m using a quad core machine with 4gb of ram, so it’s inconceivable that my machine isn’t up to the challenge. Also Google Docs takes about twice as long to sort a spreadsheet as it does in Firefox.

Brendan Eich (September 3, 2008 at 11:00 am)

@Ben: Chrome has a nice GC: exact rooting, generational with copying. Single-threaded, too (not an option for SpiderMonkey, which is used in AT&T 1-800-555-1212 and 411 AVR massively multi-threaded services built by tellme.com, now owned by Microsoft!). It definitely helps cut down on pauses and keep memory use flatter.

The Chrome comic book did have one piece of misinformation, though: it said other browsers’ engines use conservative GC, and have false positive problems because they can’t distinguish random integers from pointers into the heap. This is not true of Firefox, IE, or Opera.

/be

Andrew (September 3, 2008 at 11:02 am)

I ran the V8 benchmarks against the latest nightly build of JavaScriptCore which uses the SquirrelFish JIT and is what’s used in Safari and WebKit. I tested JSCore using its jsc shell (not sure many people know about it yet), and the numbers for V8 were pretty impressive:

JavaScriptCore – 457

V8 – 2127

http://www.tangerinesmash.com/writings/2008/sep/02/v8-and-javascriptcore-compared/

These were run on 10.5 and using the latest available WebKit build – as of last night.

David Christian Liedle (September 3, 2008 at 11:06 am)

Hey John, thanks for posting these benchmarks!

I’m very curious as to the relative CPU and RAM usage expended during the execution of these tests. You wouldn’t happen to have glanced at those, would you?

D:.

John Resig (September 3, 2008 at 11:12 am)

@Jesse Casman: The engine behind doesn’t really matter, to the end user, as long as it’s one that’ll allow them to perform their everyday tasks to the best of their abilities. If a faster engine provides that, then so be it.

@David Christian Liedle: Unfortunately I didn’t track those numbers while running the tests. Although, I’m sure they all exhibited CPU usage close to 100% (I’d be disappointed if it gave any less, honestly).

tc (September 3, 2008 at 11:13 am)

“We already see TraceMonkey (under development for about 2 months) performing better than V8 (under development for about 2 years).”

Meh. Tracemonkey sounds like basically an extension to Spidermonkey, which has been in development for years. V8 sounds like a new engine basically from scratch. Wouldn’t it be more accurate to say “Tracemonkey (under dev for 10+ years) performing better than V8 (under dev for about 2 years)”?

Nosredna (September 3, 2008 at 12:00 pm)

Chrome certainly is exciting, because it has a crazy-fast JavaScript in a shipping browser.

So when will Mozilla release its hounds? Can I please have a faster JS next time I get a Firefox update? Or are there stability, QA, and testing issues? Are there known pages that fail under TraceMonkey?

Boris (September 3, 2008 at 12:58 pm)

@Rizqi Ahmad: The reason the DOM tests are slower right now is that TM doesn’t JIT JS that calls into the DOM. So as soon as you make a DOM call, you fall back on the interpreter. This situation is not planned to persist for long.

@Nosredna: the faster JS is in any trunk Firefox build, but the pref is set to “false” by default. Search for “jit” in about:config and flip the pref. And yes, some things will probably fail and/or crash.

Nanomedicine (September 3, 2008 at 12:59 pm)

Great article! No doubt that Google’s done a great job by releasing Chrome. It’s gonna rock the browser market if nothing else.

Mike

Peter Rust (September 3, 2008 at 1:04 pm)

@tc: TraceMonkey is actually code contributed to SpiderMonkey from Tamarin, the ECMAScript engine Adobe donated to Mozilla. I’m not sure how long Adobe’s had a JIT-ing script engine.

@Nosredna: FF3.1 should release near the end of the year with TraceMonkey.

@Rizqi: I think the DOM is slower because traditionally (in IE, at least) the DOM is in a different “universe” than the Javascript objects. There’s some kind of COM call or other gymnastics that go on with every DOM property/method access, so if you can reduce the number of calls (say to a single .innerHTML), you drastically reduce the time.

I *think* (and I could be wrong here) this is one of the keys to Sizzle (http://ajaxian.com/archives/sizzle-john-resig-has-a-new-selector-engine). He only walks the DOM in one limited case. Everywhere else, he’s grabbing whole sets of elements at a time (getElementsByTagName, getElementsByClassName), thus making the browser do as much work as possible with as few JS-DOM interactions as possible.
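The "fewer crossings" idea can be sketched without a browser. The snippet below uses a stand-in object that is NOT a real DOM: it only counts how many times script code calls into it. Everything here (`createFakeDocument`, `setText`, `crossings`) is hypothetical and exists purely to show the counting; it says nothing about what any particular browser does internally.

```javascript
// A stand-in for the browser side of the boundary. It is not a real DOM;
// every method simply bumps a counter to represent one script-to-DOM call.
function createFakeDocument() {
  let crossings = 0;
  const body = {
    children: [],
    html: "",
    appendChild(node) { crossings++; this.children.push(node); return node; },
    set innerHTML(html) { crossings++; this.html = html; },
  };
  return {
    body,
    createElement(tag) { crossings++; return { tag, text: "" }; },
    setText(node, text) { crossings++; node.text = text; }, // hypothetical helper
    crossings: () => crossings,
  };
}

// Build a list node by node: three calls per item.
function buildOneByOne(doc, items) {
  for (const item of items) {
    const li = doc.createElement("li");
    doc.setText(li, item);
    doc.body.appendChild(li);
  }
}

// Build the same list as one string and hand it over in a single call.
// Note: no HTML escaping is done here, which real code would need.
function buildInOneCall(doc, items) {
  doc.body.innerHTML = items.map((item) => `<li>${item}</li>`).join("");
}

const items = ["a", "b", "c"];
const slowDoc = createFakeDocument();
buildOneByOne(slowDoc, items);
const fastDoc = createFakeDocument();
buildInOneCall(fastDoc, items);
console.log(slowDoc.crossings(), fastDoc.crossings()); // 9 1
```

If each crossing carries a fixed overhead, the first approach pays it per node while the second pays it once, which is the effect the comment above describes.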

Nosredna (September 3, 2008 at 1:11 pm)

>>the faster JS is in any trunk Firefox build, but the pref is set to “false” by default. Search for “jit” in about:config and flip the pref. And yes, some things will probably fail and/or crash.

>>FF3.1 should release near the end of the year with TraceMonkey.

That’s great. Of course, I’m not worried about me having the fast JS. I want visitors to my site to have it. That’s why I think Google’s move is so great. My site (in private beta) is twice as fast in Chrome as in public releases of Firefox 3.

Oliver Kiss (September 3, 2008 at 1:29 pm)

Great to see all these changes making browsing the internet a more pleasurable experience!

Maciej Stachowiak (September 3, 2008 at 1:33 pm)

John, we’ve done profiling of various engines running the V8 team’s tests, as well as studying the test content. The two things they focus on most heavily are property access and function/method calls. These are also the two things the V8 team focused most on speeding up. It is fair to say that this somewhat represents very “object oriented” JavaScript code, but somehow their benchmark does not at all reflect TraceMonkey’s advantage over V8 on code that does straight-up computation.

SunSpider, instead of focusing on a few language features and a single style of code, tried to cover as wide a variety of language features as possible. So if you look at the subtest breakdown, you’ll see that V8 is better at some things and TraceMonkey is better at others. I think that represents the actual state of the engines more accurately.
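To make the two styles concrete, here is a small sketch with one workload dominated by property access and method calls and another that is straight-up numeric computation. The `Point` class and both functions are invented for illustration and are not taken from either benchmark.

```javascript
// Style 1: "object-oriented" code, dominated by property access and method calls.
class Point {
  constructor(x, y) {
    this.x = x;
    this.y = y;
  }
  add(other) {
    return new Point(this.x + other.x, this.y + other.y);
  }
}

function walk(steps) {
  let position = new Point(0, 0);
  const step = new Point(1, 2);
  for (let i = 0; i < steps; i++) position = position.add(step);
  return position;
}

// Style 2: straight-up computation in a tight loop, no objects or calls.
function sumOfSquares(n) {
  let total = 0;
  for (let i = 1; i <= n; i++) total += i * i;
  return total;
}

const end = walk(1000);
console.log(end.x, end.y);     // 1000 2000
console.log(sumOfSquares(10)); // 385
```

A benchmark built mostly from the first style and one built mostly from the second can rank the same two engines differently, which is the point being made about subtest breakdowns.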

Ken Cox (September 3, 2008 at 1:49 pm)

Thanks for the great post. This is very valuable information coming from an independent JavaScript expert.

If a developer bases an application on Jquery’s library, which browser would you recommend to end-users? It sounds like Chrome would win.

Ken

idunno (September 3, 2008 at 2:34 pm)

I am in love with Google Chrome. I hope mozilla take the JS out of it so we can have fast speeds + extensions.

AndersH (September 3, 2008 at 3:30 pm)

> the full potential [of TraceMonkey] won’t be unlocked until tracing can be performed over DOM structures (which it is currently incapable of – may not be ready until Firefox 3.2 or so).

“we currently don’t compile across [DOM] calls (we plan to for Beta2 though).” — from http://andreasgal.com/2008/09/03/tracemonkey-vs-v8/#comment-95

mpt (September 3, 2008 at 3:44 pm)

“A fairly steady curve”? There’s no curve in that graph. You’re comparing different browsers, so the X axis is not continuous.

(It would make sense to talk about a curve if you were, for example, testing performance of daily builds of the same browser over time, or performance of the same browser with different amounts of memory.)

Dj Gilcrease (September 3, 2008 at 4:09 pm)

The SunSpider results by Brendan Eich seem extremely off compared to what I am seeing with v8. I have yet to produce results above 2000ms with an average of about 1995ms on SunSpider. I am running VistaPro SP1, and according to the graph it appears as if his results on Vista are closer to 2800ms.

Your Dromaeo with DOM tests are much closer to what I am seeing with v8 (http://v2.dromaeo.com/?id=32873), though it is interesting to note that almost all of the individual tests that are extremely slow (400ms or more) are jQuery tests, specifically;

jQuery – prevAll: 562.40ms ±1.27%

jQuery – nextAll: 562.60ms ±2.88%

jQuery – siblings: 1001.00ms ±2.04%

The siblings test really raised my eyebrows considering the same test with Prototype was less than 100ms

Prototype – siblings: 89.80ms ±4.93%

So why is jQuery’s siblings implementation so much slower than Prototype’s?

Also, I suspect nextAll and prevAll are so much slower (2x) because Chrome does not support those natively, so it is falling back to a pure JS implementation in jQuery

My SunSpider results

Stev (September 3, 2008 at 4:11 pm)

Strange, as Opera seems the fastest still, in REAL WORLD applications, which makes me wonder whether these synthetic benchmarks released by all the browser makers are best suited to their own browser.

Name (September 3, 2008 at 4:17 pm)

Just goes to show that WebKit really focuses on the pain points — profile first, optimize later, anyone?

Brendan Eich (September 3, 2008 at 4:37 pm)

@Dj: what Firefox 3.1 alpha build are you running? You have javascript.options.jit.content set to true, right?

My blog linked directly to the publicly available automated Firefox build that we tested. If you try that build with the jit configured on, and do not get results commensurate with mine (slightly beating V8), then please mail me.

/be

Brendan Eich (September 3, 2008 at 4:42 pm)

@Peter: TraceMonkey uses the Tamarin Tracing Nanojit, but the tracer and interpreter hookup, and the x86-64 back end, are all original from Mozilla, thanks to Andreas Gal, Mike Shaver, David Anderson, Blake Kaplan, and myself. We love the Nanojit, and it was a huge boost to our effort, but it is not nearly the whole of TraceMonkey — it’s the near-machine-level IR, trace fragment bookkeeping, and code-gen layer.

As others have observed, Adobe faces a typed-code workload with their AS3 dialect of JS, so they have not optimized untyped code as we have in SpiderMonkey. In many ways this makes TraceMonkey the best-of merger between SpiderMonkey and Tamarin code.

/be

Matt (September 3, 2008 at 4:43 pm)

I wonder if V8’s optimization for recursion-heavy code has anything to do with Google’s future application offerings? Right now it seems like a small subset of the things a Javascript engine should do well, but if Google plans to stretch capabilities in that area in the future — this would be an effective way to promote a technology that they want to see adopted for use with future applications.

kTzAR (September 3, 2008 at 4:46 pm)

While no significant improvement (4x, 6x, 8x) seem to be present between browsers by the tests the comments say (taking apart IE), I think that the point here is: if Google Chrome success in lowering the IE using stats we will be able to smile.

I think it’s fantastic that a bunch of standards-following browsers keep improving their core code. And if Google takes part in the “game” it may be true that this is the end of the Internet Explorer hegemony.

By the way, good work John.

Dj Gilcrease (September 3, 2008 at 5:23 pm)

@Brendan Eich I can’t actually get any version of FF3.1 to run the SunSpider test without crashing instantly when jit is turned on; I tested all of them from today.

http://crash-stats.mozilla.com/report/index/94aeda97-7a06-11dd-9c3c-001a4bd43ed6?p=1

Nosredna (September 3, 2008 at 5:26 pm)

As for “real world,” I have an app that is doing this:

1) JavaScript computations–financial and statistical. Real work, not make-believe browser testing work

2) Canvas updating (severely slow on MSIE via excanvas. So slow that I only show every 4th frame on IE–hate, hate, hate)

3) Progress bar updating

4) Small animated gif

5) On-the fly DOM manipulation with jQuery

This is a REAL WORLD APP, not a browser test.

IE8b2(8.0.6001.18241) 108 seconds (hate, hate, hate)

Opera9.52(10108) 53 seconds

FF3.01 48 seconds

Safari4DeveloperPreview(526.12.2) 43 seconds

Chrome0.2.149.27 24 seconds

All apps are different, but this is a real one.

IE8 MUST get native Canvas or I will declare IE8 to be the Official King of Suck.

tc (September 3, 2008 at 5:37 pm)

Peter: Right, that’s what I thought I said, where “extension” = code from Tamarin (I can’t keep up with all these silly codenames).

It’s disingenuous to compare the dev time of “adding tracing optimizations to an existing JS engine” to “a whole new JS engine”.

Peter Rust (September 3, 2008 at 6:34 pm)

@Brendan Eich: Thanks for clarifying, I didn’t realize there was so much code (and work) between the Tamarin Tracing Nanojit and the pre-existing SpiderMonkey implementation.

@tc: You’re right, building the whole thing from scratch is probably another ball game.

Regardless, building a JIT machine-code generator — and one that generates optimized code at that — is far beyond my own abilities.

My hat is off to both teams: they’ve made the open web a more attractive platform and eased the pain of the next generation of dynamic-language developers (the effects will reverberate into Python, Ruby, etc).

Poulejapon (September 3, 2008 at 7:46 pm)

I observed a >1000%+ improvement in performance between Firefox 3.0 (This is not Tamarin though) and Google Chrome when running an asymmetric encryption library.

ben (September 3, 2008 at 8:17 pm)

Google chrome kicks everyones butt. Get over it.

I saw a javascript game yesterday that had to LIMIT the framerate because google chrome made it unplayable.

And, as if the google team aren’t going to implement tracing – embrace and extend (oops I mean standing on the shoulders of giants). Resistance is futile.

David Smith (September 3, 2008 at 8:33 pm)

ben: uh, actually that’s probably just because Chrome has a different behavior in setTimeout from everyone else. It was being argued about in #chromium yesterday.

(specifically, other browsers limit timers to 10ms resolution to avoid having some webapps hog cpu; chrome does 1ms resolution)
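The effect of that clamp on a game loop can be worked out with a little arithmetic. The sketch below models the clamp as "raise any requested delay to the minimum" and computes an upper bound on ticks per second; the function names are hypothetical, the 10ms and 1ms figures are the ones quoted above, and time spent inside the callback is ignored.

```javascript
// Model of a timer clamp: any requested delay is raised to the browser minimum.
function effectiveDelay(requestedMs, minimumMs) {
  return Math.max(requestedMs, minimumMs);
}

// Upper bound on callbacks per second for a loop that re-arms its timer on
// every tick, ignoring the time spent in the callback itself.
function maxTicksPerSecond(requestedMs, minimumMs) {
  return 1000 / effectiveDelay(requestedMs, minimumMs);
}

// One way a game can cap its own frame rate instead of relying on the clamp:
// wait out whatever is left of the frame budget after doing the work.
function delayForTargetFps(targetFps, workMs) {
  return Math.max(0, 1000 / targetFps - workMs);
}

console.log(maxTicksPerSecond(0, 10));  // 100
console.log(maxTicksPerSecond(0, 1));   // 1000
console.log(delayForTargetFps(50, 5));  // 15
```

Under this model, a game that calls `setTimeout(tick, 0)` and assumes roughly 100 ticks per second could run up to ten times faster with a 1ms minimum, which would explain needing an explicit frame limit.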

orlando_ombzzz (September 3, 2008 at 8:35 pm)

john

could you provide test result for Linux? please don’t forget us ( we are a growing minority :-)

Boris (September 3, 2008 at 8:55 pm)

@ben, V8 already has its own JIT. So implementing another JIT won’t really change their results much.

Brendan Eich (September 3, 2008 at 11:21 pm)

@Dj: Thanks very much — that’s a new bug, and although the breakpad report lacks symbols for some reason, I think we can figure it out and fix it. Filed as https://bugzilla.mozilla.org/show_bug.cgi?id=453580.

/be

Jimmy (September 3, 2008 at 11:42 pm)

@John: Tests on pure DOM selectors show that Chrome got the worst performance. So, benchmark results can vary depending on how they are implemented.

http://javascriptly.com/2008/09/javascript-in-google-chrome/

Nosredna (September 4, 2008 at 1:02 am)

@Jimmy, but looking at the details there, Chrome is virtually tied for the lead in jQuery selector performance.

So it really depends on which library you’re going to use.

Of course, that’s only selectors. I’ve done lots of profiles and it’s rare that selectors are bottlenecks, isn’t it?

Question (September 4, 2008 at 1:18 am)

As john said “Tracing can be performed over DOM structures” which it’s the most important thing to speed up our daily life.

—-

I can’t stop thinking a GL backend for cairo, asyn sqlite API and this TraceEverythingMonkey, is it good enough to kill silverwhat?

Perry (September 4, 2008 at 1:20 am)

=( so sad to see that IE isnt around much for the race, they either crashed… or just simply ignored/forgotten. =\

Steven Livingstone (September 4, 2008 at 1:54 am)

Funny, coz i asked about this issue with no DOM support in V8 and its result in overall performance.

http://tinyurl.com/6qmlsn

Good to see the benchmarks highlighting this. Still looking for a solution to scalable DOM parsing using a JavaScript engine on the server tho’.

Markus.Staab (September 4, 2008 at 2:19 am)

which version of the V8 engine is included in the current chrome download? 0.2.4?

Lars Gunther (September 4, 2008 at 3:42 am)

What about features? Does V8 support getters/setters? Array and string generics? Array extras? Etc. Has anyone tested this?

Valeri (September 4, 2008 at 4:00 am)

Please, those who use Dromaeo 2 – take a look at the individual tests. According to my results in FF 3.0.1 and Chrome, Chrome is performing better in almost all pure JavaScript tests. Usually below 50% of the Firefox time. DOM manipulation shows strange patterns :(

Chrome:

# getElementById (not in document): 0.00ms ±0.00%

# getElementsByTagName(‘div’): 170.60ms ±3.16%

# getElementsByTagName(‘p’): 170.00ms ±2.92%

# getElementsByTagName(‘a’): 170.60ms ±3.66%

# getElementsByTagName(‘*’): 173.00ms ±4.31%

# getElementsByTagName (not in document): 87.60ms ±2.57%

Firefox:

# getElementById (not in document): 0.00ms ±0.00%

# getElementsByTagName(‘div’): 1.00ms ±0.00%

# getElementsByTagName(‘p’): 1.00ms ±0.00%

# getElementsByTagName(‘a’): 1.00ms ±0.00%

# getElementsByTagName(‘*’): 1.00ms ±0.00%

# getElementsByTagName (not in document): 0.00ms ±0.00%

That is slow…

and while looking mostly at jQuery’s result (because I’m using jQuery in my applications):

DOM Attributes (jQuery) – faster

DOM Events (jQuery):111.60ms

jQuery – trigger: 106.60ms ±94.06 – SLOW

DOM Modification (jQuery) – faster

DOM Query (jQuery): – overall faster

jQuery – *: 159.60ms ±157.59% slower

DOM Style (jQuery): 417.80ms

jQuery – .toggle(): 322.80ms ±246.68%

DOM Traversal (jQuery):

jQuery – prevAll: 1059.80ms ±2.85%

jQuery – nextAll: 1207.20ms ±4.80%

and

jQuery – siblings: 2202.40ms ±2.14% (sum them ;))

@John Resig and Chrome Team

Please do something about it. Just a few tweaks for the win.

Emanuele Ruffaldi (September 4, 2008 at 4:53 am)

I have put a list of implementations of various programming languages Language X -> Language Y called LanguageMix. It can be useful to look at all variations around.

http://www.teslacore.it/wiki/index.php?title=LanguageMix

Thanks for your great analysis

Emanuele

scape (September 4, 2008 at 8:26 am)

the independent processes that chrome utilizes say it all…unless there is a complete revamp of firefox or another popular browser, nothing will compare to chrome’s reliability…fast or not, nothing is more aggravating than a pandora tab taking over the browser

Edward Bespalov (September 4, 2008 at 9:21 am)

One of my tests run locally gives the following results:

FF3 – ~9sec

IE6 – ~6sec

Opera – ~7sec

Safari – ~5sec

Chrome – 60-80(!)sec

There is almost no javascript there.

You may try it from my site: http://tedbeer.net/test/gridColFreezeRowSplit.html

Also webkit has bad responsiveness with some CSS styling – compare vertical scrolling in Safari/Chrome and other browsers.

You may use “make it fast” and “make it slow” buttons for Safari/Chrome.

Ivan (September 4, 2008 at 10:06 am)

So begin the marketing wars…

@Ken, John is anything *but* independent, as he works for Mozilla.

“We already see TraceMonkey (under development for about 2 months) performing better than V8 (under development for about 2 years).”

I would say Firefox has been under development for about 5 years and Tamarin (tracemonkeys core isn’t it?) even longer still. Oh no! Now Chrome wins teh enguneering warz!11!11

Can we just appreciate the speed increase all around? I don’t see the Google engineers slamming FF performance anywhere. I’d rather see a post on the science behind tracemonkeys pending recursion optimizations.

Even more interesting would be someone posting a demo of mod_v8 or mod_tracemonkey :)

Sami Khan (September 4, 2008 at 11:18 am)

Good luck kicking Chrome to the curb. Chrome is Webkit with a few tweaks, Webkit or its ancestor has been around for a very long time. So again Google is building on a lot of other people’s work (especially the Open Source community, as Apple did) — there is little original Google work there! Yes it’s faster now, will it be forever, I doubt it… The other guys will quickly catch up, they have to (so the competition is definitely worth it)! Game on fellas!

Ray Cromwell (September 4, 2008 at 11:55 am)

John,

There is another more holistic benchmark at http://api.timepedia.org/benchmark that I’ve thrown together. Shows 2x performance deficit for TraceMonkey vs Chrome/SquirrelFish.

-Ray

Tjerk Wolterink (September 4, 2008 at 3:55 pm)

We only talk about speed here, but isn’t there a tradeoff between speed and memory?

Often problems can be solved by different algorithms, but the fast algorithms often use more memory than the slower algorithms. (Time complexity vs Space complexity)

Memory may not matter that much for browsers, but when choosing a JavaScript engine for your application the space complexity may also be an important factor.

So I wouldn’t call the fastest engine the best engine (per se).

However I wonder whether the speed improvements actually result in a higher memory usage.. can somebody elaborate on that?
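The general time-versus-space trade-off can be shown at the algorithm level with memoization: the cached version is far faster but holds on to memory that the plain version never allocates. This is only an analogy, with made-up function names; it does not show how any engine discussed here actually spends memory.

```javascript
// Slow but memory-light: recomputes the same subproblems over and over.
function fibSlow(n) {
  return n < 2 ? n : fibSlow(n - 1) + fibSlow(n - 2);
}

// Fast but memory-hungry: remembers every result it has computed.
function makeFibMemo() {
  const cache = new Map();
  function fib(n) {
    if (n < 2) return n;
    if (cache.has(n)) return cache.get(n);
    const value = fib(n - 1) + fib(n - 2);
    cache.set(n, value);
    return value;
  }
  return { fib, cacheSize: () => cache.size };
}

const memo = makeFibMemo();
console.log(fibSlow(20));      // 6765
console.log(memo.fib(30));     // 832040
console.log(memo.cacheSize()); // 29 (one entry for each n from 2 to 30)
```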

jimeh (September 5, 2008 at 12:23 pm)

Hey, your results from Dromaeo look a bit odd to me. According to the tests I’ve done, Chrome is almost 5 times faster than Safari 3.1.2 on Dromaeo. I’ve gotten similar results on other machines too, and most of my friends are telling me the same thing from their tests too.

Also, I managed to test the WebKit Nightly working on Dromaeo and get some impressive results, second fastest behind Chrome.

Here’s the details for my own testing:

http://blog.zydev.info/2008/09/03/javascript-performance-google-chrome-firefox-safari/

Paolo (September 5, 2008 at 2:29 pm)

Your article and your graphs are very interesting. Thank you for your publication.

Callum (September 6, 2008 at 11:02 am)

@Jesse Casman: I’ll try to explain as simply as possible (but sorry if it seems patronising).

There are two different considerations with the perceived “speed” of a website.

1. DOWNLOADING: how long it takes for your computer to download a web page. Note that a web page is made up of an HTML document, plus often several other files such as images, stylesheets, and JavaScript files. These are all just computer files, with a size in kilobytes, and they take a certain amount of time to get to your computer. Hopefully, downloading all these pieces should take your computer less than a few seconds (but it all depends on [a] the speed of the web server in responding, [b] the total size in kilobytes of all the pieces of the page, and [c] the speed of the connection between you and the server).

2. RENDERING: The speed of your computer in *rendering* the web page. Once your computer has downloaded the web page, it’s up to your web browser to read the code and put all the pieces (text, images etc.) together and display it on your screen. This does take a bit of time. For simple “static” web pages, it’s no big deal, because it’s a very simple job for your web browser to render (display) the page. But on websites with a lot of JavaScript, it’s a big deal: JavaScript is EXECUTED (run) inside your web browser on YOUR computer. JavaScript is basically the thing that does the nice little dynamic effects in a web page. So, one thing that affects this is your computer HARDWARE (your processor speed, and to some extent your RAM). Another is how efficient your SOFTWARE is – in particular, the JavaScript engine that your web browser uses.

To understand the difference, consider what happens when you click the “More info” link in a YouTube video description box. This doesn’t involve any network activity; it’s just your web browser running a little script, which in this case says “show a bit more of the description and the tags”. This all happens inside your computer. This is a very simple example; it seems to happen instantly. But with more complex scripts, the speed of execution does make a notable difference. If we write over-complex scripts, our web pages seem jerky and awkward to use. But as the web browsers compete to make more efficient JavaScript engines, we web developers can get away with making more complicated JavaScript code, making our web applications more interesting and powerful and interactive.

That’s the gist of it. So yes, the speed of your browser’s JavaScript engine does matter, but it’s true that most users don’t *consciously* notice it.

Seraph (September 7, 2008 at 4:58 am)

I’m really curious about the circumstances surrounding your Dromaeo test; you must have rigged the hell out of it to get that kind of result. Did you forget that anyone can actually go to the Dromaeo website and run a test themselves? This is my result comparing Chrome to TraceMonkey:

http://dromaeo.com/?id=25766,26382

RyanVM (September 7, 2008 at 9:32 am)

You didn’t run the full test suite. jresiq’s post linked to the v2 benchmark, which is what he used. You didn’t. Read his post more carefully next time.

charly (September 7, 2008 at 12:58 pm)

Sorry for this off-topic question, but I wondered which program you use for these benchmark images. Thanks in advance, charly

Humble Lisp Wheenie (September 10, 2008 at 11:49 pm)

It is nice that JS engines are finally getting a good deal of mindshare for optimizations and such… but why doesn’t anybody take SBCL or Larceny or Bigloo and write a JavaScript front-end for it? It would take less effort, and give us the performance Lisps have had for about 20 years now.

Real-time GC? Just-in-time compilation? Tracing? Direct-threaded program representation? For lispers, all of this is ‘been there, done that’.

“If you don’t understand Lisp, you are doomed to reinvent it.”

Maurice (September 15, 2008 at 1:11 pm)

We’ve done some testing on our http://www.taskwriter.com application.

The results show Chrome not far from Firefox 3.0 (really close, actually), with IE 6.0 the slowest :).

Paul Irish (September 16, 2008 at 8:35 pm)

For reference’s sake, here’s a video showing the jQuery UI effects suite executing sequentially in Chrome and Firefox 3: http://www.youtube.com/watch?v=QmRnJ-eGOlY

Balint Erdi (September 17, 2008 at 1:55 am)

My tests show Google Chrome (V8) with a huge lead over other browsers. However, what I conclude from the comments on this post and from other articles is that one can basically declare any browser victorious. It is just a matter of using the “appropriate” test suite and benchmarking system and setting the “right” parameters, isn’t it? :)

James Snyder (September 18, 2008 at 11:24 am)

Just wanted to add an additional test point for the latest WebKit nightly, which completes the Dromaeo benchmark for me. This is on a MacBook 2.4 GHz, 4 GB RAM, run back-to-back (similar minimal background conditions):

Safari Version 3.1.2 (5525.20.1):

Total Time: 8440.20ms

http://dromaeo.com/?id=42326

Safari WebKit Nightly 36519:

Total Time: 5921.40ms

http://dromaeo.com/?id=42324

Also for V8 Benchmark Suite:

3.1.2:

Score: 193

Richards: 109

DeltaBlue: 155

Crypto: 160

RayTrace: 260

EarleyBoyer: 385

Nightly:

Score: 1022

Richards: 1061

DeltaBlue: 780

Crypto: 2200

RayTrace: 390

EarleyBoyer: 1569

So, SquirrelFish is no slouch :-) Seems like V8 is faster for some things, SquirrelFish is faster for others. It would be nice to have a benchmark suite that rolls all these results together since they all seem to hold some bias, or at least particular JS engines seem to be optimizing for one set of benchmarks.
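On rolling results together: as far as I know, the V8 Benchmark Suite's overall score is the geometric mean of its per-test scores, and the numbers quoted above are consistent with that. A minimal sketch, where `geometricMean` is an illustrative helper name and not part of any suite:

```javascript
// Geometric mean of a list of positive scores.
// Summing logarithms avoids overflow when multiplying many large scores.
function geometricMean(scores) {
  if (scores.length === 0) {
    throw new Error("need at least one score");
  }
  var logSum = 0;
  for (var i = 0; i < scores.length; i++) {
    logSum += Math.log(scores[i]);
  }
  return Math.exp(logSum / scores.length);
}

// Per-test scores quoted in the comment above
// (Richards, DeltaBlue, Crypto, RayTrace, EarleyBoyer).
var safari312 = [109, 155, 160, 260, 385];
var nightly = [1061, 780, 2200, 390, 1569];

console.log(Math.round(geometricMean(safari312))); // 193
console.log(Math.round(geometricMean(nightly)));   // 1022
```

A geometric mean keeps one very fast test (Crypto here) from dominating the total the way it would in a plain average, but it does nothing about bias in which tests are chosen in the first place.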

Bernard (September 27, 2008 at 11:11 am)

Tests for DOM manipulation are difficult to measure since, even if you can modify a DOM structure quickly programmatically, it might not *render* as quickly. See for yourself:

Open google maps in Firefox and in Chrome. Try “dragging” the map left and right quickly for a moment and feel the difference: Firefox is fluid and Chrome is slow/choppy.

This is a user-perceived difference. It would be nice to be able to “measure” this programmatically, but I do not know how one could do that.

B.
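One rough way to put a number on this kind of choppiness is to record the times at which the page actually got to update during the drag, then look at the gaps between them. The sketch below covers only the summarizing step. `summarizeFrames`, the 20 ms budget, and the sample timestamps are all made up for illustration, and how the timestamps get collected (for example, from a repeating timer callback in the browser) is left open.

```javascript
// Summarize how smoothly a page updated, given the times (in ms) at which
// successive frames or timer ticks actually ran. Hypothetical helper.
function summarizeFrames(timestamps, budgetMs) {
  var gaps = [];
  for (var i = 1; i < timestamps.length; i++) {
    gaps.push(timestamps[i] - timestamps[i - 1]);
  }
  if (gaps.length === 0) {
    return { average: 0, worst: 0, slowFrames: 0 };
  }
  var total = 0;
  var worst = 0;
  var slow = 0;
  for (var j = 0; j < gaps.length; j++) {
    total += gaps[j];
    if (gaps[j] > worst) worst = gaps[j];
    if (gaps[j] > budgetMs) slow++; // a gap over budget reads as a stutter
  }
  return { average: total / gaps.length, worst: worst, slowFrames: slow };
}

// Made-up sample: updates mostly 16 ms apart, with one 80 ms stall.
var sample = [0, 16, 32, 48, 128, 144];
var summary = summarizeFrames(sample, 20);
console.log(summary.worst, summary.slowFrames);
```

The worst gap and the count of over-budget gaps usually say more about perceived smoothness than the average does, since a single long stall is what the user feels.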

Venkatesh (September 27, 2008 at 11:17 am)

Here’s the situation. My friend dropped a shocking bomb by telling me I was applying late to colleges. I had a very very slow =10kbps connection. I had to check the deadline for the admission.

I am used to Firefox, so I opened the page with it. Not even 10% of the page loaded. Then I used Chrome, and the full page loaded. After reading this article, I opened the page in IE. I think IE is fastest, then Chrome, then Firefox. But because I opened IE at a later time, the connection speed could have been different.

caiden (October 22, 2008 at 5:27 am)

I was initially very excited about Chrome being 10 times faster on JavaScript. As usual with any such claims (not just from Google), they are skewed.

Hellas (December 21, 2008 at 5:57 am)

Here is yet another browser speed test, and Chrome also wins:

http://www.evilscience.org/internet-explorer-vs-firefox-vs-opera-vs-chrome-vs-safari/

jQuery (December 27, 2008 at 2:31 am)

Chrome looks pretty fast with JavaScript. I was looking for ways to test my own custom code and kept finding benchmarks. So I ran a couple of them, and Chrome looks 10x faster than IE7 every time. Some tests are showing 100x. I don’t know if it’s my antivirus doing something to IE or if IE is just that slow :)))

Anyway, for those who came to this article looking for how to benchmark and performance-test their own custom JavaScript, here is a nice article:

http://jquery-howto.blogspot.com/2008/12/how-to-test-javascript-performance.html
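For testing your own code, the basic idea is just to run a function many times and measure the elapsed wall-clock time. A minimal sketch, assuming nothing beyond `Date.now()`; `timeIt` and `workload` are illustrative names, not a library API and not taken from the linked article:

```javascript
// Time a function by running it many times and averaging.
function timeIt(fn, iterations) {
  var start = Date.now();
  for (var i = 0; i < iterations; i++) {
    fn();
  }
  var elapsed = Date.now() - start;
  return { totalMs: elapsed, perCallMs: elapsed / iterations };
}

// Example workload: build and join a small array of strings.
function workload() {
  var parts = [];
  for (var i = 0; i < 100; i++) {
    parts.push("item" + i);
  }
  return parts.join(",");
}

var result = timeIt(workload, 1000);
console.log(result.totalMs + " ms total, " + result.perCallMs + " ms per call");
```

Timer resolution is coarse, so the iteration count needs to be high enough that the total runs to at least tens of milliseconds, and it is worth repeating the whole measurement a few times before trusting a number.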

Blaisorblade (January 17, 2009 at 9:47 pm)

> It is nice that JS engines are finally getting a good deal of mindshare for optimizations and such… but why doesn’t anybody take SBCL or Larceny or Bigloo and write a JavaScript front-end for it? It would take less effort, and give us the performance Lisps have had for about 20 years now.

> Real-time GC? Just-in-time compilation? Tracing? Direct-threaded program representation? For lispers, all of this is ‘been there, done that’.

> “If you don’t understand Lisp, you are doomed to reinvent it.”

That might be true but it doesn’t apply here.

About Lisp: the authors of V8 have long experience with VMs (for Smalltalk, Self, Java). People who worked on HotSpot (specifically, Lars Bak) work there. Nobody claims to have invented anything new.

Also, given enough manpower, starting from scratch with known techniques instead of retargeting existing systems might be better. Different languages are not equivalent.

About memory consumption: dunno about the libraries, but JS objects should be much smaller in V8 than everywhere else, except SquirrelFish Extreme, which implemented the same techniques. A JS object by definition has the semantics of a hashtable, but by using maps it gets the memory consumption of a C++/Java object (Java is the more accurate comparison, since the class and the field names are also present at runtime, unlike in C++).

Maps were developed for Self, and needed some enhancement for JS.
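To make the idea of maps concrete, here is a toy model written in JavaScript itself. It is only a sketch under my own assumptions, not V8's actual implementation: `Shape`, `Obj`, and `EMPTY` are hypothetical names. The point it illustrates is that objects built the same way share one layout description, so each object only stores its values.

```javascript
// Toy model of "maps" (hidden classes). Not V8's actual implementation.
function Shape() {
  this.slots = {};        // property name -> index into an object's storage
  this.count = 0;         // number of properties described by this shape
  this.transitions = {};  // property name -> shape reached by adding it
}

// Return the shape reached by adding `name`, creating it only once so that
// objects built the same way end up sharing one shape.
Shape.prototype.add = function (name) {
  if (Object.prototype.hasOwnProperty.call(this.transitions, name)) {
    return this.transitions[name];
  }
  var next = new Shape();
  for (var key in this.slots) {
    if (Object.prototype.hasOwnProperty.call(this.slots, key)) {
      next.slots[key] = this.slots[key];
    }
  }
  next.slots[name] = this.count;
  next.count = this.count + 1;
  this.transitions[name] = next;
  return next;
};

var EMPTY = new Shape();

function Obj() {
  this.shape = EMPTY;  // shared description of the layout
  this.values = [];    // per-object storage: just the values, no names
}

Obj.prototype.set = function (name, value) {
  if (!Object.prototype.hasOwnProperty.call(this.shape.slots, name)) {
    this.shape = this.shape.add(name);
  }
  this.values[this.shape.slots[name]] = value;
};

Obj.prototype.get = function (name) {
  if (!Object.prototype.hasOwnProperty.call(this.shape.slots, name)) {
    return undefined;
  }
  return this.values[this.shape.slots[name]];
};

var a = new Obj(); a.set("x", 1); a.set("y", 2);
var b = new Obj(); b.set("x", 10); b.set("y", 20);
console.log(a.shape === b.shape); // true
```

Because `a` and `b` add `x` and then `y` in the same order, they follow the same transitions and end up with the same shape. The property names live once in the shape rather than once per object, which is where the memory saving comes from.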

Another correction is that V8 itself has minimal native libraries and writes as much as possible in JavaScript for easier maintenance.

About tracing: I’ve heard some debate on some problems right there, namely about how to discard old traces when memory is low. When I heard of it, the idea was to wait for more info about how to solve this before implementing tracing.

Btw, I just passed a course on these topics (on VM construction in general) taught by the V8 authors themselves, so I studied the details, and I may of course be biased.

Septicemia (February 13, 2009 at 6:52 pm)

Great research you’ve done there. I never really gave it much thought, but this seems impressive. Chrome FTW!

biggazlad (February 28, 2009 at 9:29 am)

Just downloaded the latest version of Chrome today, and its performance running a page that contains some JS to bubble sort an array of strings is diabolical (the JS itself could be improved no doubt, but if it works quickly enough in IE and FFox…). By diabolical, I am talking 25 seconds here compared with 1-2 seconds in other browsers.

4 for-each loops, one of which has a single for-each nested within. I can easily imagine more intense iteration.

Granted, it does seem a little quicker to render static markup, but V8??? V-twin, more like.

coderextreme (February 28, 2009 at 10:23 pm)

Now that Safari 4.0 beta is out, how about rerunning the tests on that?

Or point me to a page which does the comparison.