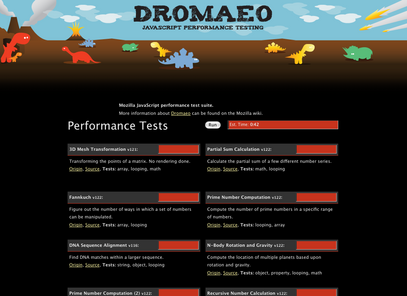

Dromaeo is the name that I’ve given to the JavaScript performance test suite that I’ve been working on over the past couple months.

I was hoping to hold off on this release for another week or two while I finished up some final details, but since it’s been discovered and is about to hit the Digg front page, there isn’t a whole lot that I can do to stop it.

There are a ton of details concerning how it works, and how to use it, on the Dromaeo wiki page. I won’t go through too much of it here, but the wiki page should answer most questions.

Probably the most pressing question that’ll be encountered (outside of what is answered on the wiki page) is “What is the relation of Dromaeo to SunSpider?” (SunSpider being the WebKit team’s JavaScript testing suite).

Right now I’m working very closely with all the browser vendors to make sure that we have a common-ground test suite that is both highly usable and statistically sound (not to mention providing results that are universally interesting). There are a number of outstanding concerns that’ve been raised by users of the suite, along with a number of concerns that’ve already been rectified – again, all of this is clarified on the Dromaeo wiki page. It’s of the utmost concern that this suite be as applicable as possible. It’s very likely that the core suite will be moving to a common working ground where all browser vendors can work on it.

I especially want to thank Allan Branch of LessEverything who provided the awesome design for the site. It’s like he tapped into my brain and produced exactly what I wanted – without even knowing it. I highly recommend them, if you have design work that needs to be done.

Mona (April 11, 2008 at 2:44 pm)

Hey, great work. One thing, though: I was working with a JavaScript library that performed well in FF under Windows, but when I tested it in FF under Linux it gave me horrible performance. How can something like this be managed?

John Resig (April 11, 2008 at 2:46 pm)

@Mona: Something like that all depends on the quality of the operating system, what applications are running simultaneously – and who knows what else. Not really sure what to say, specifically – other than that FF3 is going to be much more awesome.

Mislav (April 11, 2008 at 3:19 pm)

About “providing results that are universally interesting” … I can’t recall when I last did 3D Mesh Transformation, DNA Sequence Alignment, Traversing Binary Trees or Rotating a 3D Cube in JavaScript.

How about the DOM, CSS manipulation and redrawing? And excuse me if I’m being ignorant while asking for this :)

John Resig (April 11, 2008 at 3:22 pm)

@Mislav: Naturally, that is the obvious next step for these tests. The first step was to just get a suite out that could actually be used for that style of test. The existing suites simply couldn’t scale to meet those demands. Having good DOM tests is of great concern to everyone (browser vendors, developers, library authors) – they should be arriving forthwith.

Gavin Brown (April 11, 2008 at 4:35 pm)

What is the point of creating a JavaScript benchmark that is virtually identical to the SunSpider benchmark created by the WebKit team several months ago? You use practically the same tests for Pete’s sake! It would have been more beneficial for all browser vendors to collaborate on the existing benchmark. I guess the appeal of Not Invented Here is alive and well at Mozilla.

John Resig (April 11, 2008 at 4:57 pm)

@Gavin Brown: You didn’t actually read the blog post or wiki page, did you? How about you take a gander through the methodology to get a feel for why SunSpider was inadequate. It’s important to note the difference between a testing suite and a suite of tests, as well. Dromaeo will eventually include all of the tests that are in SunSpider, plus many more. The tests that SunSpider provided were adequate, for now (which is why we’re standardizing on them); the suite was not.

And as I mentioned in the post, we’re working with the WebKit team – along with the other browser vendors – to standardize on a suite of tests.

Mike Shaver (April 11, 2008 at 5:03 pm)

If you look at the SunSpider tests, also, I think you’ll find that many of them originated with jresig’s earlier JS performance test suite, and others were copied from other sources. I’m not sure there are any original tests in SunSpider; the innovation there was really just how it was run, and how the repetition count was normalized.

http://ejohn.org/projects/javascript-engine-speeds/

etc.

Not invented where? :)

Maciej Stachowiak (April 11, 2008 at 5:21 pm)

@John Resig

I think Dromaeo’s testing methodology has significant flaws in itself. First of all, it wraps every test in a closure, which factors out the performance of access to globals (something that actually matters for real scripts). Second, the way it determines two results are a “tie” is statistically unsound – you can’t just check if two confidence intervals overlap, you have to do a two-variable t-test, which SunSpider does correctly. Third, running a test more times if the variance is higher than some threshold creates statistical artifacts – it’s not good science to change an experiment midstream based on statistical results, and it makes it impossible to do statistical analysis on the totals since you can’t meaningfully generate 5 totals if some tests ran more than 5 times. Fourth, Dromaeo seems to report much higher variance than SunSpider, so it is likely that the harness is interfering with the execution of the tests more than on SunSpider and creating noise.

Given these fairly serious flaws (which I’ve let you know about privately), I think calling SunSpider “inadequate” is kind of arrogant. All testing methodologies may have flaws and can likely be improved. I would rather not bash Dromaeo in public but I think it’s unfair to create the impression that SunSpider is somehow deeply inferior.

Incidentally, the one place where your methodology specifically cites a flaw in SunSpider is, I think, arguably a point in its favor. The test suite was designed to isolate JavaScript, and when I tested in Safari, the in-browser results were barely higher than the pure command-line harness. However, it’s possible in theory for the browser to interfere with the “pure” performance of the JS engine, whether for rendering reasons or otherwise. If this is happening, it is a bug in that browser, and *should be fixed*, because that is the JS performance that end users will actually experience. I believe SunSpider accurately simulates the execution of a script in initial pageload, and the fact that Firefox somehow makes this slower than other situations is a Firefox bug which was helpfully (and validly) found by the test suite.
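The second point in the comment above, that overlapping confidence intervals are not the same as a two-sample t-test, can be illustrated with a small sketch. This is not code from either suite: the function names, the sample timings, and the choice of Welch's unequal-variance form of the t-test are all assumptions made for illustration, and the critical values are the standard two-tailed 95% table entries for up to 10 degrees of freedom.

```javascript
// Two-tailed 95% critical values of Student's t for df = 1..10
// (standard table values; enough for two samples of 5 runs each).
const T95 = [12.706, 4.303, 3.182, 2.776, 2.571, 2.447, 2.365, 2.306, 2.262, 2.228];

function mean(xs) {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Unbiased sample variance (divides by n - 1).
function variance(xs) {
  const m = mean(xs);
  return xs.reduce((sum, x) => sum + (x - m) ** 2, 0) / (xs.length - 1);
}

// Round df down and clamp it to the table. For df above the table this
// uses a slightly larger (more conservative) threshold than the true one.
function tCritical(df) {
  const clamped = Math.min(Math.max(Math.floor(df), 1), T95.length);
  return T95[clamped - 1];
}

// 95% confidence interval for the mean of one set of runs.
function confidenceInterval(xs) {
  const m = mean(xs);
  const half = tCritical(xs.length - 1) * Math.sqrt(variance(xs) / xs.length);
  return { low: m - half, high: m + half };
}

// The "tie" rule being criticized: do the two intervals overlap?
function intervalsOverlap(a, b) {
  const ca = confidenceInterval(a);
  const cb = confidenceInterval(b);
  return ca.low <= cb.high && cb.low <= ca.high;
}

// Welch's two-sample t-test at the 95% level (assumes non-zero variance).
function welchSignificant(a, b) {
  const va = variance(a) / a.length;
  const vb = variance(b) / b.length;
  const t = Math.abs(mean(a) - mean(b)) / Math.sqrt(va + vb);
  const df = (va + vb) ** 2 / (va ** 2 / (a.length - 1) + vb ** 2 / (b.length - 1));
  return t > tCritical(df);
}

// Hypothetical timings in ms, five runs per browser.
const runsA = [100, 101, 102, 103, 104];
const runsB = [103, 104, 105, 106, 107];

console.log(intervalsOverlap(runsA, runsB)); // true
console.log(welchSignificant(runsA, runsB)); // true
```

With these made-up numbers the two intervals overlap, so an overlap rule would call the result a tie, while the t-test still reports a significant difference. The overlap check is the stricter of the two, which is why it can hide real differences.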

Boris (April 11, 2008 at 5:21 pm)

Mona, if your timing included rendering and not just JavaScript, then a lot will depend on your exact video card and video driver…

Maciej Stachowiak (April 11, 2008 at 5:26 pm)

@Mike Shaver

I wrote at least two of the tests in SunSpider myself from scratch, and my colleague Oliver Hunt wrote another. 12 of the 26 tests in SunSpider were also available in John’s earlier suite, but I believe all are also available from other sources.

(Also, as you know, I originally tried to collaborate with John on his older test suite, but that did not work out.)

Mike Shaver (April 11, 2008 at 10:21 pm)

I wasn’t sure, as I said; I apologize for not properly crediting the 3 new tests. My point, which I hope was clear, was that this was not a case of SunSpider’s original test creations being ripped off by John — benchmark scripts get passed around and refactored all the time, including many taken from decidedly un-weblike sources such as the alioth shootout. In the case of 3d-cube.js, the work to remove the DOM logic from the original demo code was done by John, and he even submitted a (still waiting) patch to the SunSpider copy of the test to fix a bug he found in that DOM removal. (John’s tests credit the original sources; I don’t see anything similar in the SunSpider tests.)

I don’t share your views on the importance of progress bar rendering time during script-dominated benchmarks, which are different from what I’d understood them to be in our previous discussions about the effects of coalesced updates on SunSpider results, but I certainly respect that you hold them.

I do, though, have a hard time reconciling your belief that progress bar rendering _should_ affect ostensibly pure-JS tests with the position that “other work” being done by the harness is harmful. We know that there is inherent noise in JS tests on many (all?) engines due to GC scheduling, so the absence of any such noise would lead me to believe that tests weren’t testing a rather important aspect of real-world JS performance: the cost of keeping memory usage well-bounded, and the performance effects stemming therefrom. For example, we (Mozilla) know that we want to reduce GC pause effects, which is one reason we’re looking forward to being able to use MMGc in our engine. No isolated test or set of tests can be perfectly representative of the breadth of the web, and so relative importance of different outcomes will be reflected in different test suites.

(Your statistical complaints seem to roughly correspond to the concerns that John has listed in the wiki page linked from his post, no?)

Reading your original SunSpider announcement, in which you clearly give the impression that John’s previous tests were inadequate, makes me not feel especially concerned about the accusations of arrogance here; John may feel otherwise.

I’ve already expressed my regret — and John has his — that your single mail was left unanswered in error. It’s not a problem unique to that case — https://bugs.webkit.org/show_bug.cgi?id=17458 has been sitting for some time as well.

Maciej Stachowiak (April 11, 2008 at 11:25 pm)

Mike, I didn’t specifically accuse Dromaeo of ripping off the tests. But your implied accusation that SunSpider was a “not invented here” exercise, a rip-off of John’s old tests, or unoriginal in general was totally uncalled for, under the circumstances.

In the original SunSpider announcement, I was very careful not to call other JavaScript benchmarks, including John’s, “inadequate” or otherwise criticize them in any strong way, because I think making good benchmarks is challenging work and because we’re all in this together. My specific wording was, “none of these were quite what we needed to measure and improve real-world JavaScript performance”.

I wish that instead of doing this we could be collaborating. Arguing about specific flaws in each other’s benchmarks in public is something I didn’t want to see happen (and I believe I’ve said as much to you and John before, so I’m sad that you guys went down that road). Rather than continue in that vein, here are the things in SunSpider that I believe are original, at least in the field of JavaScript benchmarking:

1) Some of the test cases, which were written specifically for SunSpider.

2) The specific collection of tests, which was an attempt to balance across different areas of the JavaScript language. (The individual tests came from John’s older tests, the computer language shootout, test cases reported as bugs, and many sources around the web; they retain credits in the individual test files.)

3) The naming of the tests.

4) The splitting of the tests into categories.

5) The adjustment of the tests to take similar amounts of time as each other, at least in popular browsers of that era, for balance.

6) Computing 95% confidence interval error ranges based on the t test (and using multiple runs of each test).

7) Having a comparison mode, to compare two sets of results easily.

8) Determining statistical significance of differences using a two-variable t test.

9) The specific way the command-line harness works (mostly written in JavaScript with a bit of perl).

10) The specific way the browser-hosted harness works (which presents a realistic execution environment while factoring out most non-scripting factors, modulo browser bugs).

11) Making result URLs bookmarkable and shareable.

12) Making it easy for anyone to use their own copy of the harness code so there is no need to rely on a specific web site.

13) Reporting comparisons using multiples rather than percents (“1.20x as fast” instead of “17% faster”) because % is ambiguous and commonly interpreted in three different ways (if A runs in 1/5th the time of B, is it 80% faster, 400% faster, or 500% faster? it’s definitely 5x as fast, though…)
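The arithmetic behind point 13 can be written out as a short sketch. The function and field names here are made up for illustration; the timings are just the "A runs in 1/5th the time of B" case from the list, expressed as 20 ms against 100 ms.

```javascript
// Given how long the same test takes in A and in B, compute the multiple
// and the three percentage readings that "X% faster" is commonly taken to mean.
function compare(timeA, timeB) {
  return {
    timesAsFast: timeB / timeA,                        // "5x as fast"
    percentLessTime: 100 * (timeB - timeA) / timeB,    // A takes 80% less time
    percentMoreSpeed: 100 * (timeB - timeA) / timeA,   // A's speed is 400% higher
    percentOfSpeed: 100 * timeB / timeA,               // A runs at 500% of B's speed
  };
}

// A runs in 1/5th the time of B.
const result = compare(20, 100);
console.log(result.timesAsFast);      // 5
console.log(result.percentLessTime);  // 80
console.log(result.percentMoreSpeed); // 400
console.log(result.percentOfSpeed);   // 500
```

All three percentages describe the same measurement, which is the ambiguity the list item points at; only the multiple has a single reading.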

I think that is quite a bit of innovation, for a benchmark, and the combination of these things has made SunSpider the de facto standard of JavaScript benchmarking. I will gladly give Dromaeo credit for whatever in it is original, but I do wish we could be collaborating instead of sniping at each other.

Mike Shaver (April 12, 2008 at 1:29 am)

I didn’t mean to diminish the innovations in SunSpider’s infrastructure, but I can see how my “just how it was run” phrasing made it sound that way. Apologies.

We can be collaborating, and as I’ve said to you before I agree that SunSpider is a much better benchmark for JS performance than iBench. We’ll continue to send patches and reports of problems as we find them, as we do with WebKit in general, and I hope you will as well. As the wiki page indicates, John is still working on addressing concerns raised by you and other browser developers. John and I had hoped that they would be addressed before Dromaeo was widely visible, but events overtook us.

I like SunSpider, and spent a lot of time in it when working on the JS performance improvements in Firefox 3, which is why I and others encouraged John to submit patches to the tests — not that he needed much encouragement, as his record in WebKit’s bugzilla indicates. One was just left to sit, and another was rejected as being “non-representative”, pending your confirmation, so we started to think that there was value in having a test suite that reflected what our priorities were with JS performance, since as you well know it’s pretty vital to have something against which to gauge possible improvements.

Dromaeo’s innovations, or in some cases just differing design decisions, are listed pretty well in the wiki page. Some of the differences, like the use of server-side storage for results, come from the additional detail that we want in the reports, so that we can more easily use the tests as input to our continuous test system, while still being able to rev the tests themselves and not inadvertently compare apples to oranges. Oliver’s comment in https://bugs.webkit.org/show_bug.cgi?id=17634 alludes to the difficulty of doing that with SunSpider, and it was a priority for us, because we know that we’re going to want to adjust tests to rebalance them over time. (On my MBP the default runs of some of the SunSpider tests are well under 100ms, some as low as 35ms, which means that the next round of optimizations will render them too fast to be very useful for profiling or tracking in our performance reporting. Revving the number of iterations, or increasing the branching factors or other scaling elements, will be easy in Dromaeo.) Earlier versions used URLs for comparison, but they quickly became just too huge.

Doeke Zanstra (April 12, 2008 at 4:53 am)

@John: on my (a bit old) iMac G4 700 MHz with 1 GB memory, I have problems running the tests in Firefox 2.0.0.13. FF keeps coming up with the message “unresponsive script/stop script – continue”. I don’t have this problem with Camino or Safari…

timothy (April 12, 2008 at 11:26 am)

Does anyone remember the PC video card wars? The design of benchmarks can be very contentious, because it’s hard to make a benchmark that captures “real world” issues. Video card makers started tuning toward the benchmarks even when it may have slightly hurt real-world cases.

But that’s OK, I still think benchmarks can help the whole market get faster.

For a JavaScript benchmark, I think variety is key. You want to hit as many of the features of the language as you can. If something is left out, it may be slighted by the people doing the language implementation.

Mike Branski (April 13, 2008 at 12:47 am)

All functionality and above debates aside, I think it’s awesome that the dinosaurs move independently of each other when you re-size the browser.

Scott Johnson (April 13, 2008 at 1:35 am)

This thing really kills IE6! Wow, it ran in under 2.5 seconds on FF3.0b5 on my PC. IE6 ate up a core and ran for about 10 minutes before I killed it… and it had only completed one of the tests.

Mike Branski (April 13, 2008 at 2:34 am)

@John: Two cosmetic notes: In IE6 the page never finishes loading; it’s getting hung up on http://dromaeo.com/images/comets.png. Also, all the tests show up under the first column instead of being two columns like in Firefox. Neither issue is present in IE7.

@Scott Johnson: Same here. Take a look at the MozillaWiki at http://wiki.mozilla.org/Dromaeo#Running_the_Tests. It says the DNA Sequence test can take up to 15-20 minutes in IE6/7, which might explain your issue.

Saptarshi (April 13, 2008 at 5:59 pm)

I have been following Dromaeo for about a month now and it’s been a very consistent JavaScript benchmark. Also, perfectly timed to showcase the good performance of Mozilla with Firefox 3.

I actually wrote a post about it last month comparing different browsers and their results: “Webkit Winning in Dromaeo – Mozilla’s JavaScript Benchmark”. Alas, it didn’t catch on with the Digg crowd :))

Maciej Stachowiak (April 14, 2008 at 8:41 pm)

@Mike Shaver

Apologies accepted.

Scott Johnson (April 15, 2008 at 2:22 pm)

@Mike Branski: Honestly, I’m not all that interested in seeing the results from IE6/7. I know they’re slow. I just didn’t realize that they’re that slow. There’s no way I’m waiting 15 minutes for a test to run. I have more important things to do with my time. Thanks for being faster, Firefox! :)

Mahbook Qwada (June 4, 2008 at 3:22 pm)

@John

Had a few questions regarding the test methodology you have used:

– What are the criteria you used to select the subset of existing tests from SunSpider? While SunSpider talks about being real-worldish, these tests are a subset of it and so would cover just a subset of real-worldish usage.

– Specific inclusions, like Base 64 Encoding and Decoding, seem to be overemphasized: this test alone takes 50% of the net Dromaeo execution time for IE8 Beta 1. The number is 20% for SunSpider.

– Is Base 64 Encoding and Decoding worth a 50% weighting to represent all real-world JavaScript scenarios? How do you decide these weightings across tests/browsers?

– When could we expect more additions to these tests, so that the suite represents the real state of JavaScript performance across browsers?

– This is just JavaScript performance. Any plans to measure overall real-world AJAX performance (which you would agree matters more)?